Compositional Methods

Before addressing the composition for each scene individually, it is essential to ensure that the game’s soundtrack feels cohesive. A unified musical experience maintains player immersion, but it must be balanced with characteristics unique to each scene to keep the experience interesting. To achieve this, I plan to use common instrumentation across the tracks while incorporating distinctive elements for each scene.

The soundtrack will blend orchestral and electronic elements. The orchestral components, played by MIDI-controlled virtual instruments, align with the game’s historical theme and match the modest fidelity of the game’s visuals. This mix of virtual acoustic and electronic instruments enhances the fantasy world of Mechanical God Saga. Matching the music’s fidelity to the visuals is deliberate, because it creates a coherent experience. A mismatch can also work, however: music of higher fidelity than the visuals can uplift a game, an approach sometimes used for games developed for smaller devices with limited computing power. That is not the case for our game.

When composing for specific scenarios, it is crucial to convey the appropriate emotion for each scene, such as peacefulness, relief, or stress. Understanding the scene’s context and potential events is key to achieving this. Triggers for musical changes can happen at any time, so the music must synchronize quickly with gameplay. It is therefore imperative to identify every point in the music where a change might be needed and adjust it accordingly to maintain immersion.

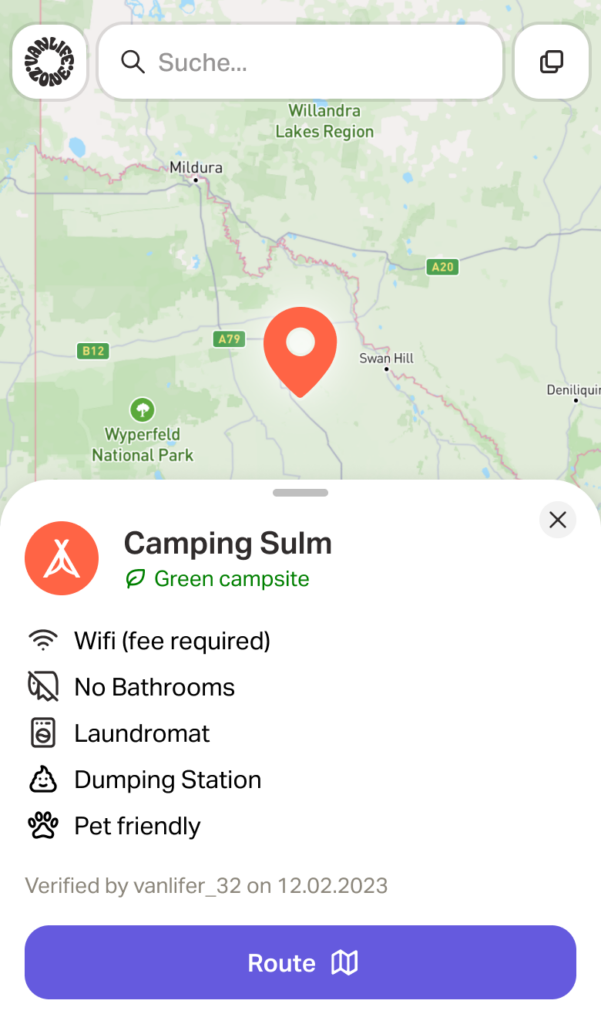

For example, in the forest scene, different musical changes are applied depending on which section of the song is playing when enemies appear. Figure 1 illustrates the initial mapping to achieve a state of tension for this scenario. Other music themes will require multiple states of tension as situations evolve; for the initial forest scene, however, a single state of tension is sufficient.

By carefully mapping out these musical changes and ensuring quick adaptation to gameplay triggers, the soundtrack will enhance the overall experience of Mechanical God Saga, creating a seamless and immersive journey for players.

The music is structured as follows:

Intro > Segment A > Segment B > Segment C

Because the structure is not linear, this order reflects how the piece was composed rather than how it will play: different sections will be looped depending on gameplay, except for the intro, which is never looped. For each section, the changes that portray a first state of tension must be mapped, as the following table shows. Synthesized sounds with a metallic character are being considered for the enemy soldiers, reflecting their metal vests and their connection to the mystery of the nuclear event.

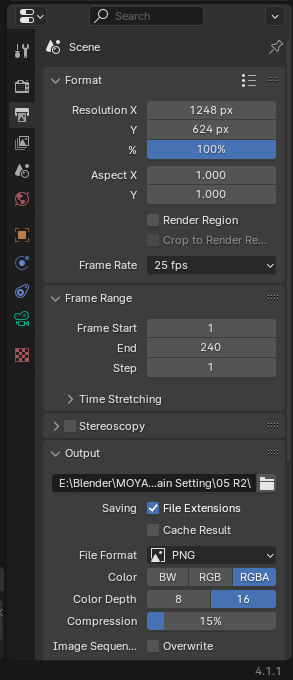

| Intro | Segment A | Segment B | Segment C |
|---|---|---|---|
| Always the same; plays only once. | Increased cutoff frequency for the bass. | Replace instrumentation with more synthesized/metallic sounds; pumping effect. | Increased cutoff frequency for the bass. |
| – | Replace flutes with synthesized trumpets. | Change in the melody. | Replace flutes and violins with detuned synthesized sounds. |

Fig. 1 Adaptive process for a first state of tension.

Because the intro plays during an introductory dialogue, the player cannot reach the enemies while it is playing, so there is no need to map changes to this part of the music. The other sections have defined changes designed to heighten alertness and a sense of danger.
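To make the mapping in the table checkable, it can be written down as data. The following is a minimal Python sketch, not part of the actual FMOD project; the names `TENSION_CHANGES`, `LOOPED_SEGMENTS`, `changes_for`, and `unmapped_segments` are hypothetical. It encodes the Fig. 1 table and verifies that every segment that can be playing when enemies appear has at least one change defined, while the intro stays empty.

```python
# Hypothetical encoding of the Fig. 1 table: the changes applied to each
# segment when the first state of tension is triggered.
TENSION_CHANGES: dict[str, list[str]] = {
    "Intro": [],  # plays once during the opening dialogue; enemies unreachable
    "Segment A": [
        "Increased cutoff frequency for the bass",
        "Replace flutes with synthesized trumpets",
    ],
    "Segment B": [
        "Replace instrumentation with synthesized/metallic sounds, pumping effect",
        "Change in the melody",
    ],
    "Segment C": [
        "Increased cutoff frequency for the bass",
        "Replace flutes and violins with detuned synthesized sounds",
    ],
}

# Segments that can be playing when enemies appear (the intro never loops).
LOOPED_SEGMENTS = ["Segment A", "Segment B", "Segment C"]


def changes_for(segment: str, tense: bool) -> list[str]:
    """Return the changes to apply to `segment` in the given tension state."""
    if segment not in TENSION_CHANGES:
        raise KeyError(f"unknown segment: {segment}")
    return list(TENSION_CHANGES[segment]) if tense else []


def unmapped_segments(mapping: dict[str, list[str]]) -> list[str]:
    """List looped segments that have no tension change defined."""
    return [s for s in LOOPED_SEGMENTS if not mapping.get(s)]


if __name__ == "__main__":
    print(unmapped_segments(TENSION_CHANGES))  # -> []
    print(changes_for("Segment A", tense=True))
```

Keeping the mapping as data makes it easy to extend later themes with several tension states, since a missing entry shows up immediately in `unmapped_segments`.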

Technical Implementation

Creating adaptive music for Mechanical God Saga requires audio middleware that connects the music to the game and reacts to triggers from the game engine. For this project, we will use FMOD, integrated with RPG Maker, the engine used to develop the game. Because there is no official integration between FMOD and RPG Maker, additional JavaScript programming will be necessary to establish a functional link. Daniel Malhadas, the game’s creator and programmer, is currently researching and developing this connection.

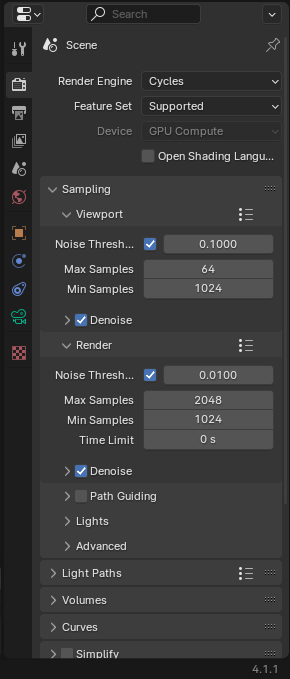

The adaptive system requires both branching and layering. As a starting point for understanding these systems, Fig. 2 shows a simple system designed for the first forest theme, which has only one trigger, tied to a state of tension. The red segments represent the music altered for the state of tension.

Fig. 2 Adaptive mapping system for the initial forest theme.
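The branching part of Fig. 2 can be reduced to a small sequencer. This is a hedged Python sketch under stated assumptions: the function `forest_playlist` and the "(tense)" naming are hypothetical, and it only models the choice made at segment boundaries. In the project itself this logic would live in FMOD, and changes within a segment are handled by layering instead.

```python
from itertools import cycle
from typing import Callable, Iterator


def forest_playlist(is_tense: Callable[[], bool]) -> Iterator[str]:
    """Yield segment names for the forest theme.

    The intro plays once; Segments A, B and C then loop indefinitely.
    `is_tense` is polled at each segment boundary and selects the
    tense ("red") version of the next segment.
    """
    yield "Intro"
    for section in cycle(["A", "B", "C"]):
        name = f"Segment {section}"
        yield f"{name} (tense)" if is_tense() else name


if __name__ == "__main__":
    state = {"tense": False}
    playlist = forest_playlist(lambda: state["tense"])
    print([next(playlist) for _ in range(4)])
    # -> ['Intro', 'Segment A', 'Segment B', 'Segment C']
    state["tense"] = True  # enemies appear
    print(next(playlist))  # -> Segment A (tense)
```

Passing the tension state in as a callable keeps the sequencer independent of how the game actually reports it.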

All transitions and overlay triggers need to be quantized to the tempo (BPM) of the source cue, with the cue’s meter and quantization grid set up. All starts and stops should have adjustable fade-in and fade-out lengths. Together, these settings ensure smooth and quick transitions between segments.
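The quantization rule comes down to simple arithmetic on the cue’s tempo and meter. The Python sketch below is illustrative only: in middleware such as FMOD, quantization is normally configured in the project rather than computed by the game, and the helper names `next_quantized_time` and `fade_in_gain` are hypothetical. It finds the next bar (or beat) line at or after a trigger and computes a linear gain for an adjustable fade length.

```python
import math
from typing import Optional


def next_quantized_time(now_s: float, bpm: float, beats_per_bar: int,
                        quantize_beats: Optional[float] = None,
                        cue_start_s: float = 0.0) -> float:
    """Return the first grid point at or after `now_s` (seconds).

    The grid is derived from the source cue's tempo and meter: by default
    transitions wait for the next bar line, or every `quantize_beats` beats.
    """
    beat_s = 60.0 / bpm
    step_s = beat_s * (beats_per_bar if quantize_beats is None else quantize_beats)
    elapsed = now_s - cue_start_s
    # Small epsilon so a trigger landing exactly on the grid is not delayed.
    n = math.ceil(elapsed / step_s - 1e-9)
    return cue_start_s + n * step_s


def fade_in_gain(t_s: float, start_s: float, fade_len_s: float) -> float:
    """Linear fade-in gain in [0, 1]; use 1 - gain for a fade-out."""
    if t_s < start_s:
        return 0.0
    if fade_len_s <= 0:
        return 1.0  # zero-length fade means an immediate cut
    return min(1.0, (t_s - start_s) / fade_len_s)


if __name__ == "__main__":
    # 120 BPM in 4/4: one beat = 0.5 s, one bar = 2 s.
    print(next_quantized_time(3.1, bpm=120, beats_per_bar=4))  # -> 4.0
    print(next_quantized_time(3.1, bpm=120, beats_per_bar=4, quantize_beats=1))  # -> 3.5
    print(fade_in_gain(4.5, start_s=4.0, fade_len_s=1.0))  # -> 0.5
```

Quantizing to the bar keeps transitions musically aligned but can delay a reaction by up to one bar; a beat grid reacts faster at the cost of less natural phrase boundaries.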

For the layering approach, instead of exporting individual layers, I exported each version as a full mix, and the system will blend between them when a trigger occurs. This addresses the need for continuous changes, such as raising the bass filter’s cutoff frequency to build tension. Ideally, these adjustments would be controlled within the audio engine, but limitations of the virtual instruments used make this impossible; blending full mixes is a practical workaround. FMOD’s built-in effects, such as equalization, pitch, reverb, and delay, will be used for effects like dulling the music when the player is injured, using equalization curves and possibly a narrowing of the stereo field to enhance immersion.
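Blending between full-mix versions amounts to a crossfade driven by a single blend value. The Python sketch below only illustrates the gain math, assuming the calm and tense versions are exported at the same tempo and length so that they stay sample-aligned; in practice the blend would be automated inside FMOD, and `crossfade_gains` and `blend_buffers` are hypothetical names.

```python
import math
from typing import List, Tuple


def crossfade_gains(x: float, equal_power: bool = True) -> Tuple[float, float]:
    """Return (calm_gain, tense_gain) for a blend position x in [0, 1]."""
    x = min(1.0, max(0.0, x))
    if equal_power:
        # Constant total power: calm_gain**2 + tense_gain**2 == 1.
        return math.cos(x * math.pi / 2), math.sin(x * math.pi / 2)
    # Linear (equal-gain) crossfade: gains sum to 1.
    return 1.0 - x, x


def blend_buffers(calm: List[float], tense: List[float], x: float,
                  equal_power: bool = True) -> List[float]:
    """Mix two sample-aligned full-mix buffers at blend position x."""
    if len(calm) != len(tense):
        raise ValueError("both versions must be sample-aligned and equal length")
    g_calm, g_tense = crossfade_gains(x, equal_power)
    return [g_calm * a + g_tense * b for a, b in zip(calm, tense)]


if __name__ == "__main__":
    print(crossfade_gains(0.5))  # both gains are about 0.707
    print(blend_buffers([1.0, 1.0], [0.0, 0.0], 0.25, equal_power=False))  # -> [0.75, 0.75]
```

An equal-power curve keeps loudness steadier when the two versions differ substantially. Because the versions share much of their material, a linear crossfade may avoid a bump in level mid-blend, so both curves are worth auditioning.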

As mentioned before, control knobs, either continuous or stepped, are planned to adjust the music according to the scene’s tension, the protagonist’s position, and the player’s health or success rate during battles. If the game’s programmer can access this knob system, applying the necessary changes will be much easier, simplifying communication between the music and the game.
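The knob idea can be sketched as a small parameter object that the game writes to and the music system reads. This Python sketch is an illustration under assumptions, not the project’s implementation: `MusicKnob`, `set_value`, `health_to_cutoff_hz`, the knob names, and the 500 Hz to 20 kHz cutoff range are all hypothetical. A continuous knob is clamped to its range, a stepped knob also snaps to whole-number positions, and a health value is mapped to a low-pass cutoff that dulls the music as the player is injured.

```python
import math
from dataclasses import dataclass


@dataclass
class MusicKnob:
    """Hypothetical game-side control that the game sets and the music reads."""
    name: str
    minimum: float
    maximum: float
    discrete: bool = False  # stepped knob: snaps to whole-number positions
    value: float = 0.0

    def set_value(self, raw: float) -> float:
        """Clamp (and, for stepped knobs, snap) a value coming from the game."""
        v = min(self.maximum, max(self.minimum, raw))
        if self.discrete:
            v = float(math.floor(v + 0.5))  # round half up
        self.value = v
        return v


def health_to_cutoff_hz(health: float, low_hz: float = 500.0,
                        high_hz: float = 20000.0) -> float:
    """Map health in [0, 1] to a low-pass cutoff on a logarithmic scale.

    Full health leaves the music open (high_hz); lower health dulls it.
    """
    h = min(1.0, max(0.0, health))
    return low_hz * (high_hz / low_hz) ** h


if __name__ == "__main__":
    tension = MusicKnob("tension", 0.0, 1.0)        # continuous
    zone = MusicKnob("zone", 0, 3, discrete=True)    # stepped
    print(tension.set_value(1.4), zone.set_value(1.6))  # -> 1.0 2.0
    print(round(health_to_cutoff_hz(0.5)))            # -> 3162
```

The cutoff is interpolated logarithmically because filter sweeps are heard roughly on a logarithmic frequency scale; with a linear mapping, most of the knob’s upper travel would produce little audible change.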