The paper “Design Principles and User Interfaces of Erkki Kurenniemi’s Electronic Musical Instruments of the 1960’s and 1970’s” by Mikko Ojanen et al., published at the NIME 2007 conference, offers a comprehensive examination of the innovative electronic musical instruments designed by Erkki Kurenniemi. These instruments, created in the 1960s and 1970s, were avant-garde for their time, significantly influencing the field of electronic music with their experimental approaches to user interfaces and interaction designs.

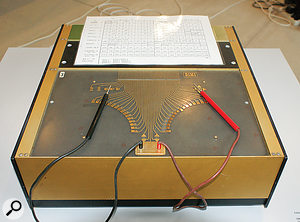

Kurenniemi’s instruments, like the Dimi series, used digital logic and unconventional interactive control methods that departed from traditional musical interfaces. His creations incorporated novel ideas such as real-time control and non-traditional input mechanisms (like biofeedback and video interfaces), and they were designed with an experimental ethos that challenged conventional musical instrument design. This approach allowed for a unique artistic expression that mirrored the radical cultural shifts occurring during that era.

However, despite their innovative designs, Kurenniemi’s instruments faced challenges related to user accessibility and market acceptance. The complexity and unfamiliarity of the interfaces may have hindered wider adoption and commercial success. This reflects a common tension in design between innovation and usability: groundbreaking ideas do not always align with user expectations or needs, which can limit their practical application and acceptance.

Critically, this paper not only documents historical innovations but also provides insights into the relationship between technological capabilities and artistic expression in electronic music. It emphasizes the importance of understanding user interaction in the design of new musical instruments and the potential barriers innovators may face when their creations outpace the technological and cultural readiness of their time.

Overall, the paper serves as a valuable resource for understanding the evolution of electronic musical instruments and offers critical lessons on the impact of user interface design on the adoption of new technologies in art. It’s a recommended read for those interested in the intersection of music technology, design, and user experience.