Im/material theatre spaces

In my last post, I presented a work from the project Im/material theatre spaces, which offers a potential answer to my question about a digital construction rehearsal.

Since Im/material theatre spaces encompasses further works that delve into digital topics, particularly virtual and augmented reality in the theater environment, I would like to discuss some of these additional projects, as they can serve as inspiration for my own thinking.

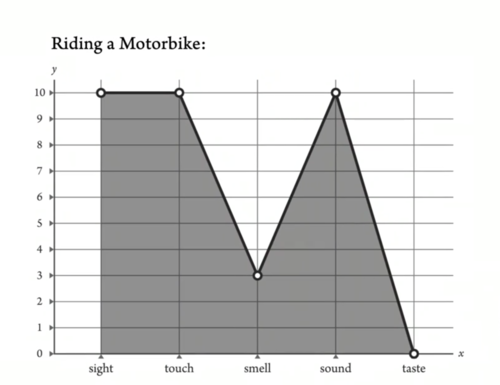

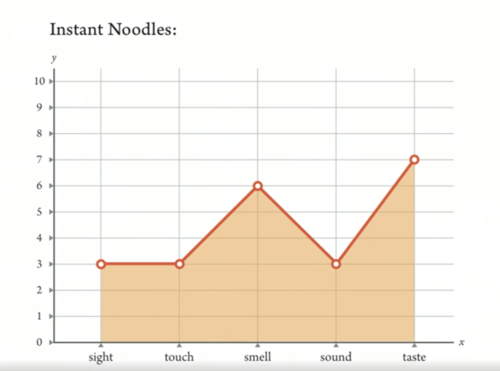

This research project explores the synergy between immersive technologies and centuries-old theater knowledge. The project team posits that theater and virtual/augmented reality (VR/AR) share spatial immersion and related methods, and that both raise questions of participation and changing perspectives. VR, through complete immersion, opens up new storytelling possibilities, allowing shifts in perspective and the embodiment of different roles. AR, on the other hand, enriches reality by overlaying it with digital content, creating a fusion of the real and digital worlds.

Research Questions

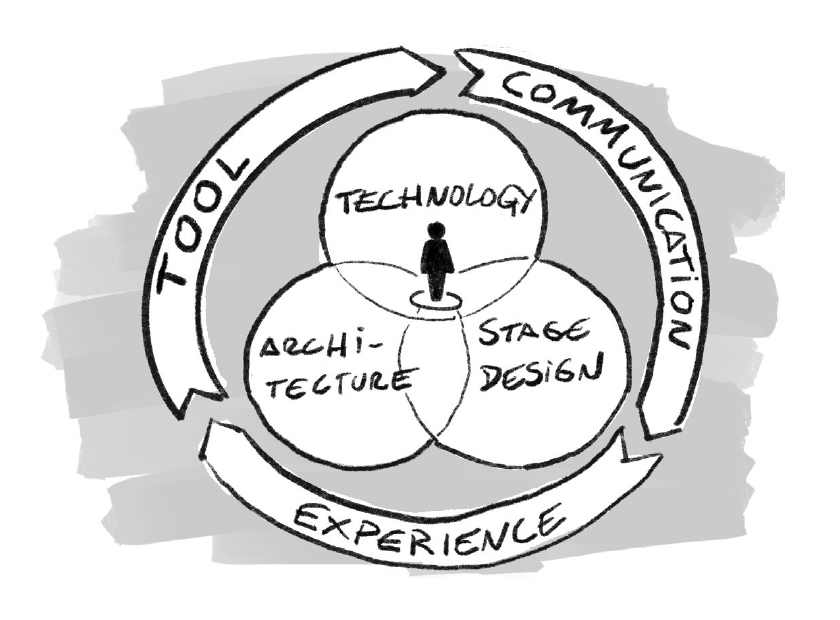

The project addresses key questions to unlock the potential of VR/AR in theater, exploring practical applications in architecture, stage design, and theater technology. Specific inquiries include the use of augmented reality in planning theater renovations, improving safety standards backstage with digital technologies, and employing immersive technologies to provide innovative access to cultural heritage.

Goals

The overarching goal of the research project is to establish theaters and event venues as ongoing hubs of technical innovation. By investigating the intersection of the analogue and digital worlds, the project aims to make these new technical spaces usable for theater practitioners. The focus lies on developing prototypical solutions, communicating findings to the theater landscape, and fostering a sustained dialogue through workshops, lectures, and blog posts. The project's final publication provides a comprehensive overview of its results and methods and explores potential future developments in the theater and cultural landscape.

Project: Digital Mounting Systems

Background:

The project addresses a lack of knowledge about assembling and dismantling complex equipment in the events industry. Not everything can be adequately conveyed through training, and many paper-based assembly and operating instructions are impractical or too vague. To ensure safety during assembly, the project aims to develop digital support.

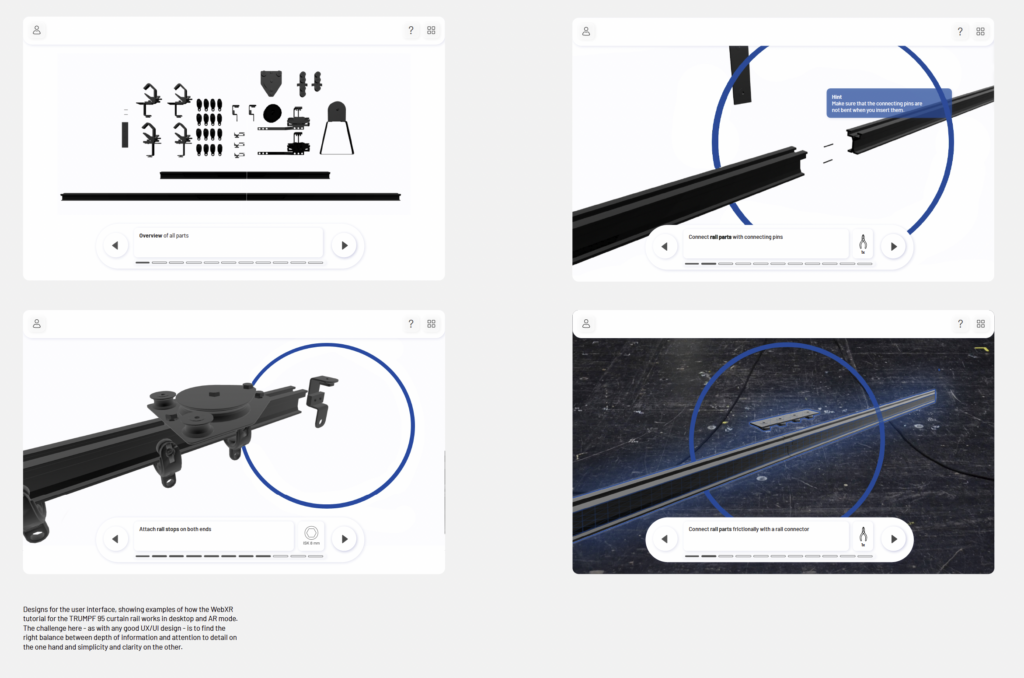

A functional prototype addressing the AR-supported setup of a curtain rail has been developed and serves as a practical basis for further discussion. An interactive website displays the assembly instructions in detailed steps, supported by 3D animations. The instructions can be viewed on conventional screens or immersively through augmented reality glasses or the AR functions of smartphones. Because the instructions are delivered through a website, they can be updated in the future without updating the end devices.
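To make the idea of web-delivered, step-by-step instructions more concrete, here is a minimal Python sketch of how such instructions might be modeled as data. It is not the project's implementation: the JSON field names (`id`, `version`, `steps`, `animation`), the classes `Step` and `Instruction`, the functions `parse_instruction` and `render`, and the `.glb` animation file names are all hypothetical. The point it illustrates is that the client only interprets a document delivered by a server, so changing that document changes what users see without touching the end device.

```python
import json
from dataclasses import dataclass


@dataclass
class Step:
    number: int
    text: str
    animation: str  # reference to a 3D animation asset (hypothetical file name)


@dataclass
class Instruction:
    instruction_id: str
    version: int
    steps: list[Step]


def parse_instruction(payload: str) -> Instruction:
    """Build an Instruction from a JSON document as a server might deliver it."""
    data = json.loads(payload)
    steps = [Step(s["number"], s["text"], s["animation"]) for s in data["steps"]]
    # Present steps in order regardless of their order in the payload.
    steps.sort(key=lambda s: s.number)
    return Instruction(data["id"], data["version"], steps)


def render(instruction: Instruction) -> list[str]:
    """Render each step as a text line; a real client would also play the animation."""
    return [f"Step {s.number}: {s.text} [{s.animation}]" for s in instruction.steps]


# Hypothetical payload for a curtain-rail instruction; the content is illustrative only.
PAYLOAD = json.dumps({
    "id": "curtain-rail",
    "version": 2,
    "steps": [
        {"number": 2, "text": "Attach the rail segment to the brackets", "animation": "step2.glb"},
        {"number": 1, "text": "Fix the mounting brackets", "animation": "step1.glb"},
    ],
})

if __name__ == "__main__":
    for line in render(parse_instruction(PAYLOAD)):
        print(line)
```

Running the script prints the two steps in order, even though the payload lists step 2 first; publishing a new payload on the server is all an update would require in this sketch.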

Feedback:

The digital assembly aid was generally considered helpful, especially because of its detailed representation of complex steps. A realistic representation was considered necessary, particularly for identifying components quickly. Use on a tablet or touchscreen was preferred, while augmented reality glasses were seen as promising for the future. A wish was expressed for a personal account in order to customize existing instructions, for example to record notes and solutions specific to the house or to individual productions. It was also noted that the application could be useful for additional instructions and for visualizing theater projects. One technical obstacle is providing and maintaining high-quality 3D data.
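The wish for personal accounts suggests keeping the shared instructions unchanged and storing each user's additions separately. The following Python sketch assumes exactly that design; `Step`, `UserAccount`, `add_note`, and `personalized_view` are hypothetical names, not part of the prototype.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Step:
    number: int
    text: str


@dataclass
class UserAccount:
    """Hypothetical personal account holding the user's own notes per step."""
    name: str
    notes: dict[tuple[str, int], list[str]] = field(default_factory=dict)

    def add_note(self, instruction_id: str, step_number: int, note: str) -> None:
        # Notes live in the account, so the shared instruction is never modified.
        self.notes.setdefault((instruction_id, step_number), []).append(note)


def personalized_view(instruction_id: str, steps: list[Step], account: UserAccount) -> list[str]:
    """Combine the shared steps with this user's notes, leaving the steps untouched."""
    lines = []
    for step in steps:
        lines.append(f"{step.number}. {step.text}")
        for note in account.notes.get((instruction_id, step.number), []):
            lines.append(f"   Note: {note}")
    return lines


if __name__ == "__main__":
    shared = [Step(1, "Fix the mounting brackets"), Step(2, "Attach the rail segments")]
    crew = UserAccount("stage crew")
    crew.add_note("curtain-rail", 2, "Example note for a specific production")
    print("\n".join(personalized_view("curtain-rail", shared, crew)))
```

Keying notes by step number keeps the sketch short, but if a central update renumbers the steps, notes would attach to the wrong step; stable step identifiers would avoid that.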

Additional Areas for Digitization in Internal Processes:

- Inventory control systems

- Calculation tools

- Warehousing

- CRM systems for customer service

How could the project be continued, and what future applications could arise from the initial prototypes? Based on the project feedback, one direction would be the individualized, fully automated creation of stage mounting systems. A website could allow users to freely configure a modular rail system, and the resulting individualized system could then serve as the basis for automatically generating precise assembly instructions.
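The configurator idea can be sketched as a function from a configuration to a bill of materials and an ordered list of steps. The Python sketch below is purely illustrative and assumes a straight rail made of segments joined by connectors and suspended from hangers at a maximum spacing; `RailConfig`, `bill_of_materials`, `generate_instructions`, and the spacing rule are assumptions, not taken from the project, and real rigging would have to follow the manufacturer's load specifications.

```python
import math
from dataclasses import dataclass


@dataclass
class RailConfig:
    """Hypothetical configuration of a straight modular rail chosen on a configurator website."""
    segment_lengths_m: list[float]  # rail segments in mounting order, in metres
    max_hanger_spacing_m: float  # assumed maximum distance between hangers, in metres

    def __post_init__(self) -> None:
        if not self.segment_lengths_m or any(length <= 0 for length in self.segment_lengths_m):
            raise ValueError("at least one segment with a positive length is required")
        if self.max_hanger_spacing_m <= 0:
            raise ValueError("the maximum hanger spacing must be positive")


def bill_of_materials(cfg: RailConfig) -> dict[str, int]:
    """Count the parts needed for the configured rail."""
    total = sum(cfg.segment_lengths_m)
    # Hangers at both ends, plus enough in between to stay within the maximum spacing.
    hangers = math.ceil(total / cfg.max_hanger_spacing_m) + 1
    return {
        "rail segments": len(cfg.segment_lengths_m),
        "connectors": len(cfg.segment_lengths_m) - 1,
        "hangers": hangers,
    }


def generate_instructions(cfg: RailConfig) -> list[str]:
    """Turn a configuration into an ordered list of assembly steps."""
    bom = bill_of_materials(cfg)
    total = sum(cfg.segment_lengths_m)
    spacing = total / (bom["hangers"] - 1)  # even spacing from one end to the other
    steps = [f"Prepare {count} x {part}" for part, count in bom.items()]
    steps.append(f"Mount {bom['hangers']} hangers every {spacing:.2f} m over {total:.2f} m")
    for i, length in enumerate(cfg.segment_lengths_m, start=1):
        steps.append(f"Hang segment {i} ({length:.2f} m)")
        if i < len(cfg.segment_lengths_m):
            steps.append(f"Join segment {i} to segment {i + 1} with a connector")
    return steps


if __name__ == "__main__":
    for line in generate_instructions(RailConfig([2.0, 2.0, 1.0], 1.5)):
        print(line)
```

For example, `RailConfig([2.0, 2.0, 1.0], 1.5)` yields 3 segments, 2 connectors and 5 hangers spaced 1.25 m apart over 5.00 m, followed by alternating hang and join steps. Because the instructions are derived from the configuration, changing the configuration regenerates them rather than requiring a manually edited manual.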

Resources

DTHG: Abschluss-Publikation des Forschungsprojektes "Im/material Theatre Spaces" (final publication of the research project "Im/material Theatre Spaces")