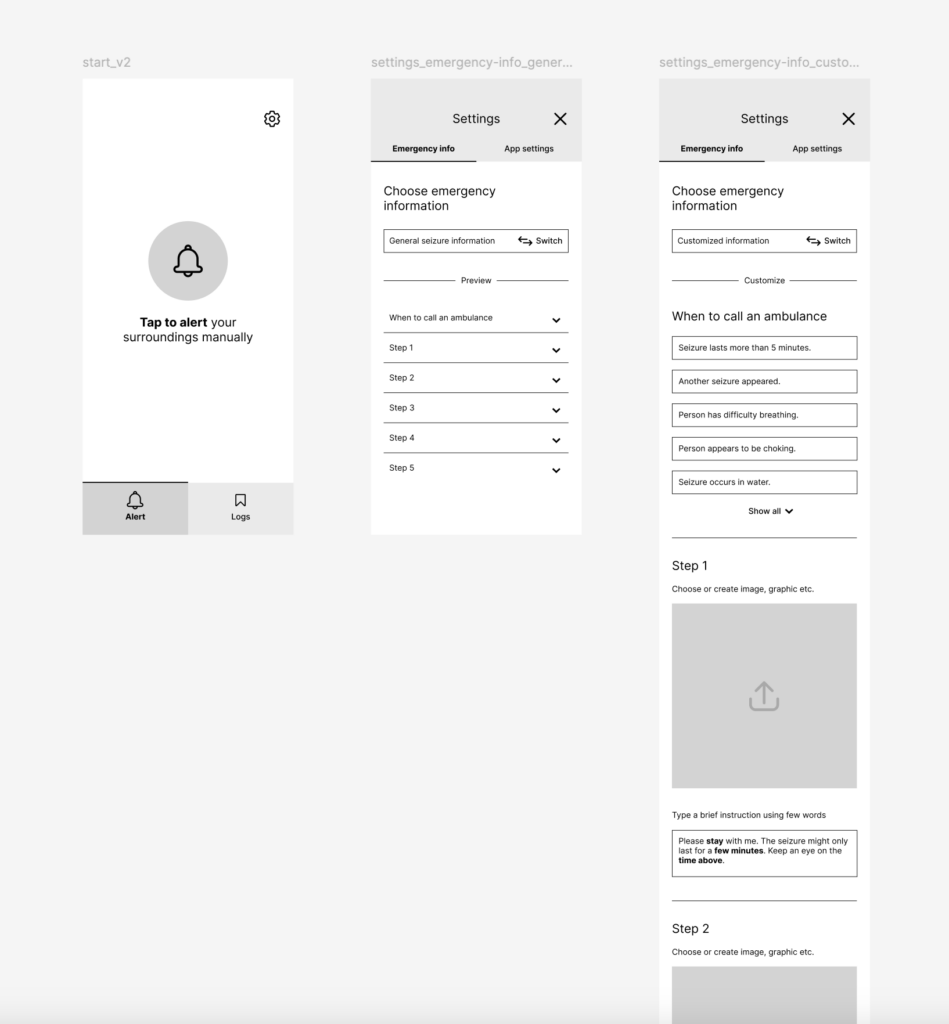

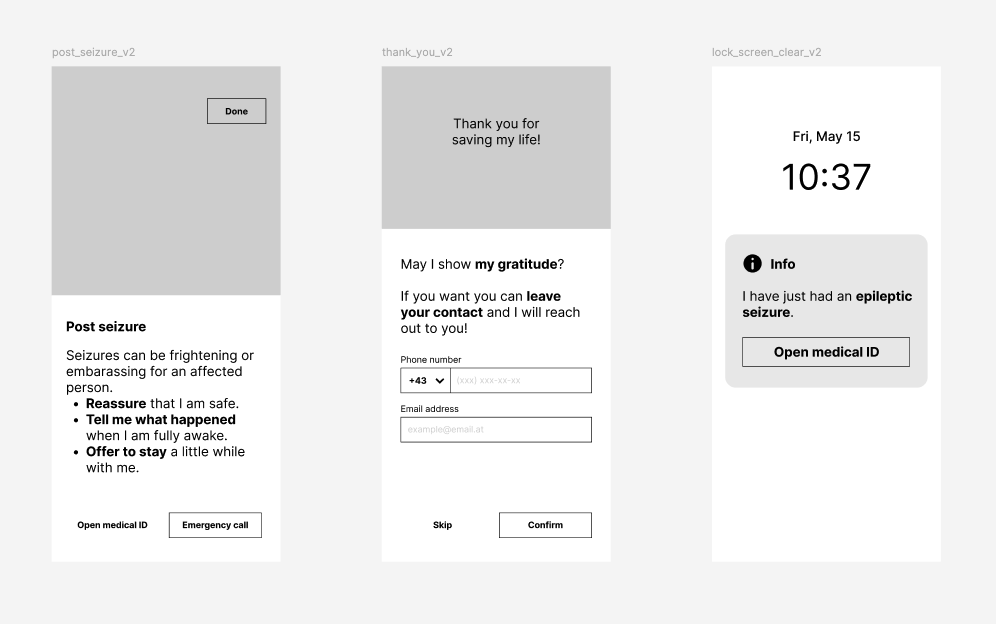

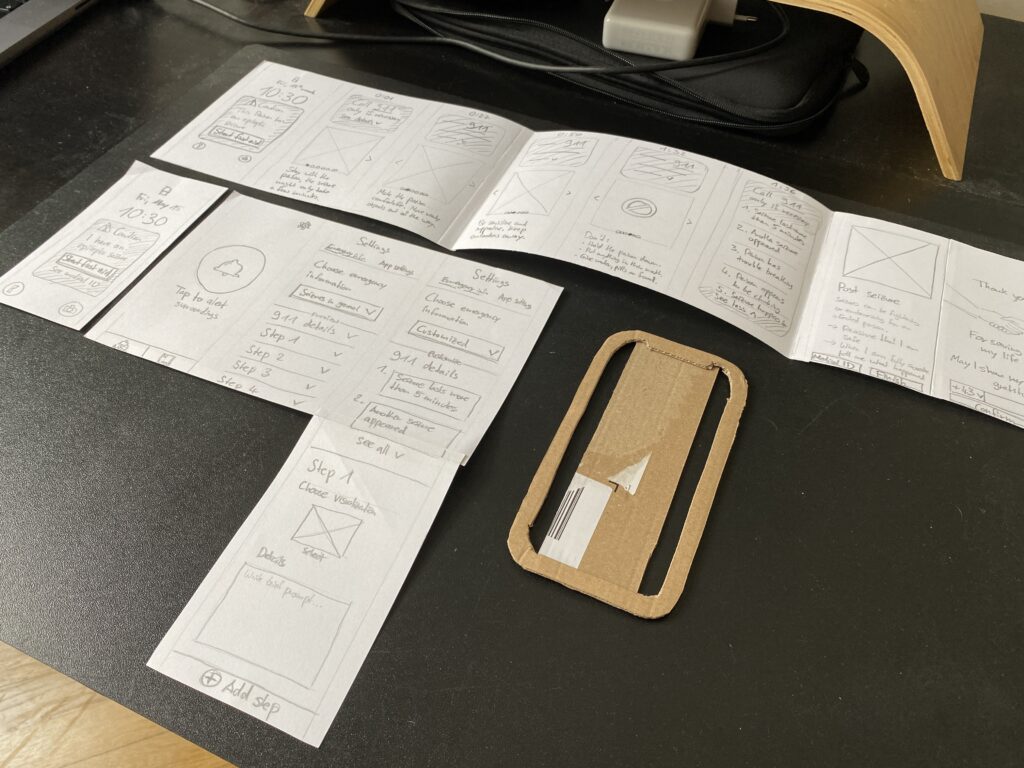

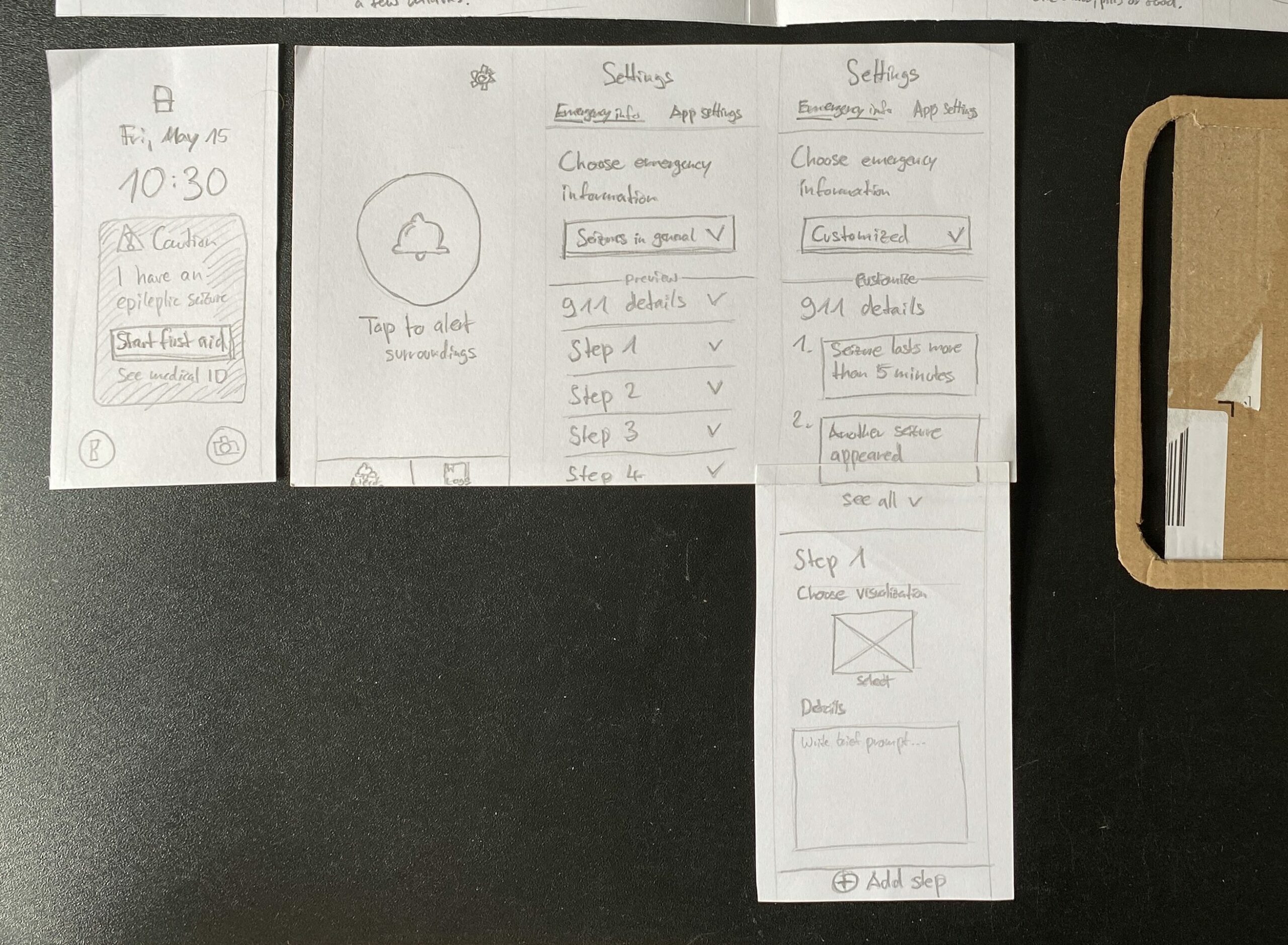

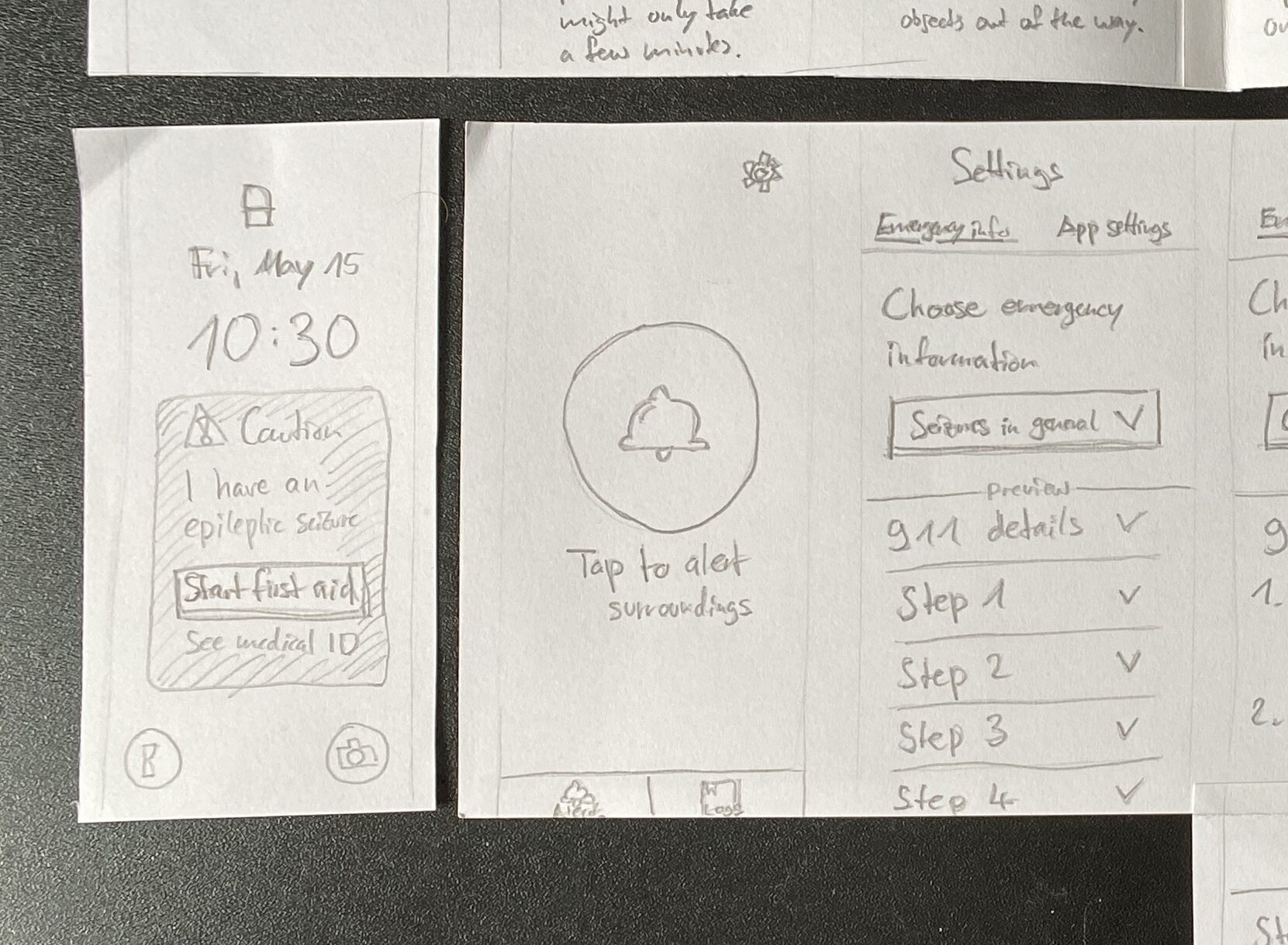

To close out this semester, I would like to give a short demonstration of how my prototype works, so I created a short video that shows its functionality.

Reflections

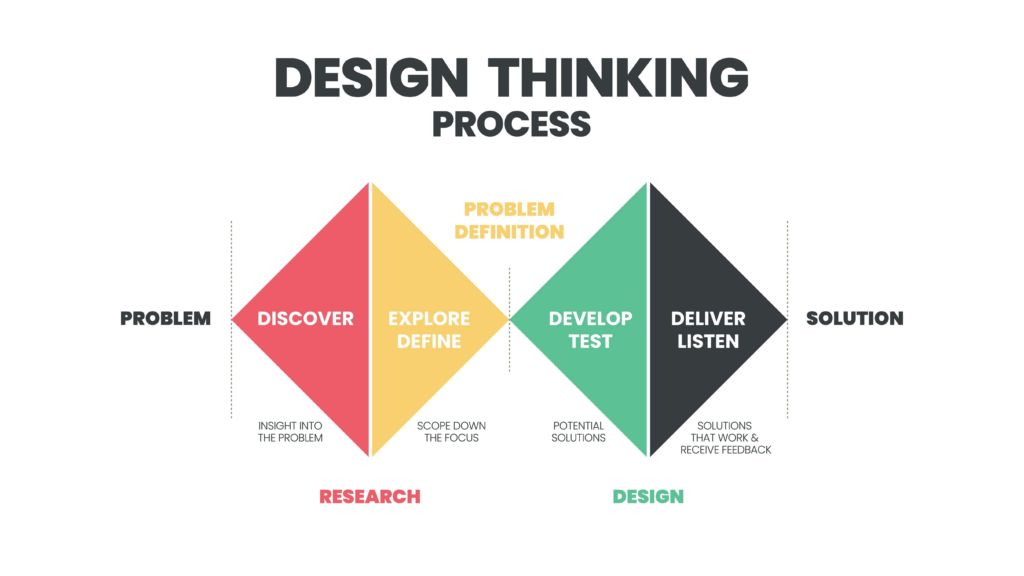

All in all, I enjoyed the Design & Research process this semester. This time the work was more hands-on, consolidating my research from the first semester into a rough prototype. I was able to overcome my initial doubts about how I could make a valuable contribution to my chosen topic, given that solutions already exist. The potential I saw in my idea was confirmed by the feedback interview I conducted with the Institut für Epilepsie in Graz.

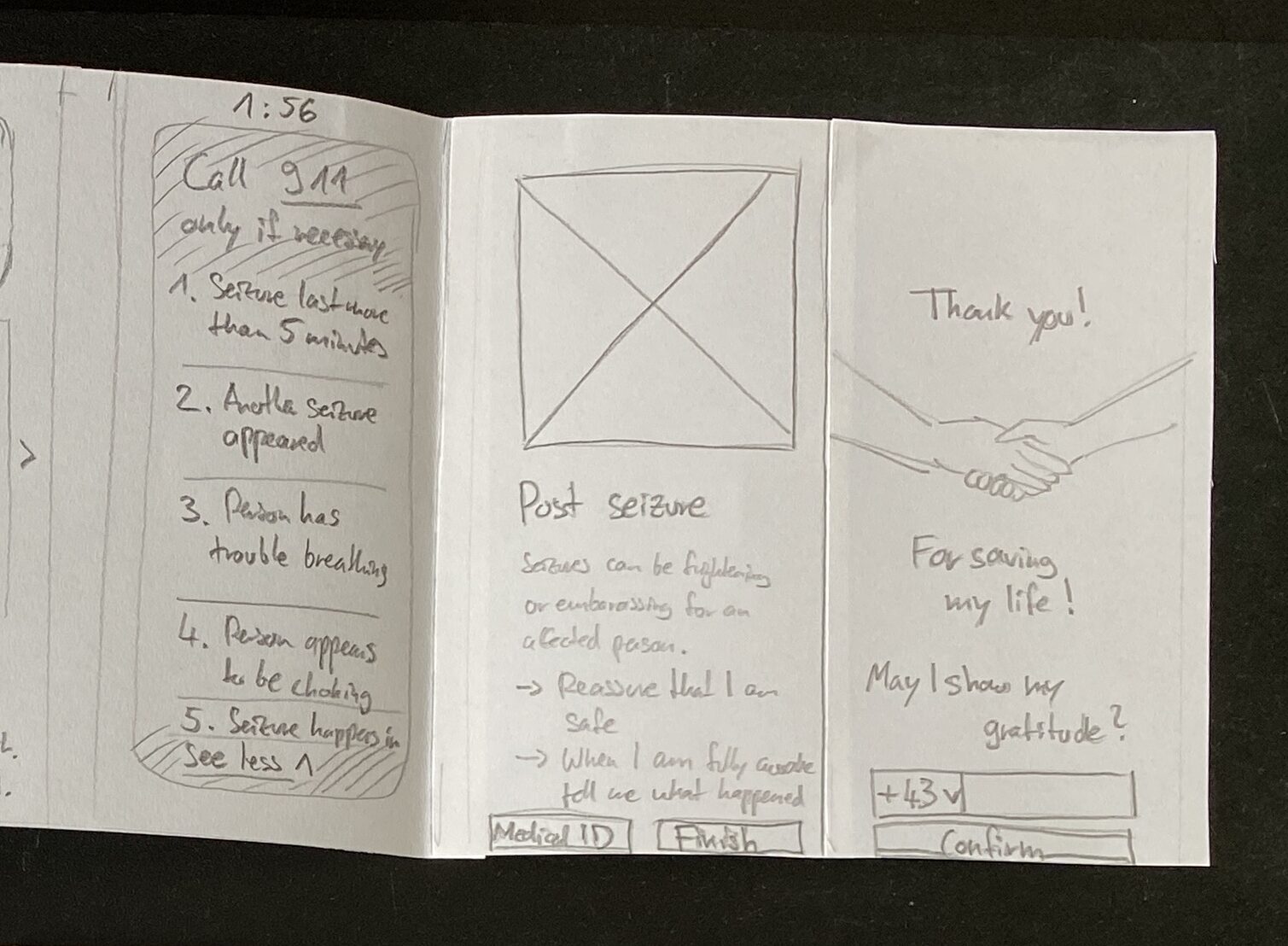

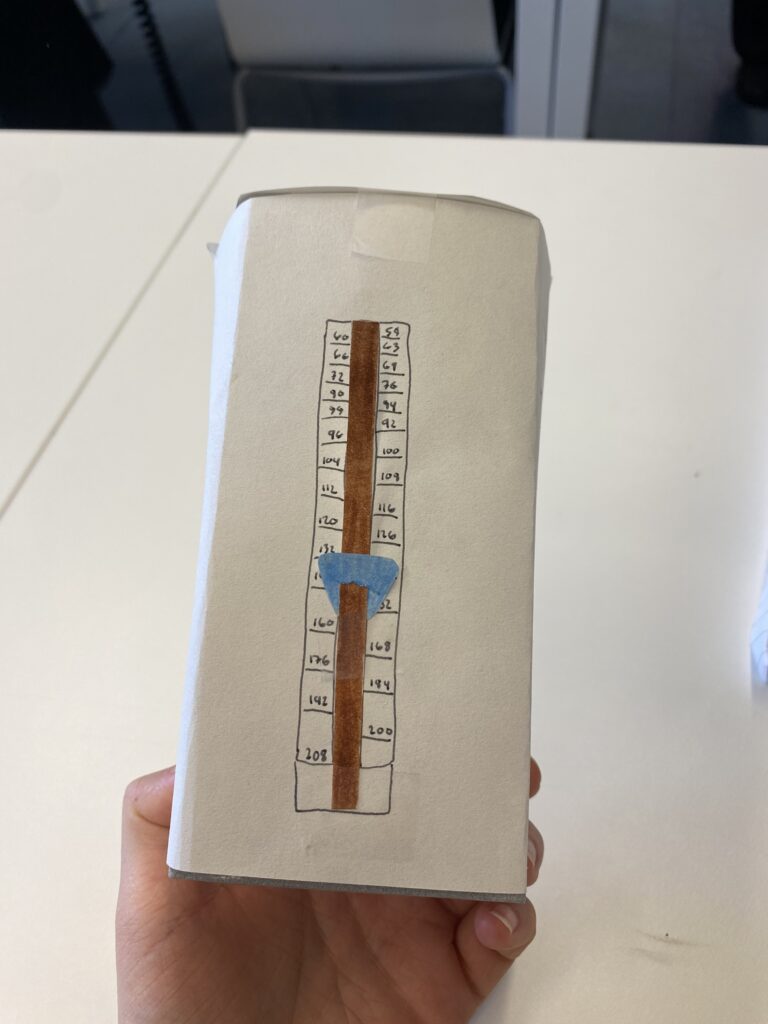

As one can see, this prototype is at a very early stage. Based on future feedback, it needs to be refined in its interaction logic and real content, as well as in its sound and visual design, so that it also addresses emotional perception. This prototype could serve as a test object in evaluation practices such as expert reviews, interviews and tests to further develop the concept.

Resources

Freesound.org. Downtown Vancouver BC Canada by theblockofsound235. Retrieved June 26, 2024, from https://freesound.org/people/theblockofsound235/sounds/350951/

Freesound.org. VIZZINI_Romain_2018_2019_Emergency alarm by univ_lyon3. Retrieved June 26, 2024, from https://freesound.org/people/univ_lyon3/sounds/443074/

Freesound.org. Busy cafeteria environment in university – Ambient by lastraindrop. Retrieved June 26, 2024, from https://freesound.org/people/lastraindrop/sounds/717748/