At the beginning of our third semester, we Interaction Design students once again had the privilege of attending the main conference of the World Usability Congress 2024, held on October 16 and 17 at the Stadthalle Graz. The event gave us an excellent opportunity to deepen our understanding of usability and accessibility and to draw inspiration from industry experts. The two days were packed with keynotes and interactive workshops covering a wide range of topics central to user experience design.

For my part, I mainly attended sessions focused on accessibility, a subject that has always held particular significance for me. Among the various presentations, one talk stood out the most: "Websites and Apps for Everybody" by Mari-Ell Mets, the Head of Accessibility at Trinidad Wiseman. Her talk left a profound impression on me due to its relevance, practical insights, and passionate advocacy for inclusion in digital design.

Key insights from Mari-Ell Mets’ talk

Mets began her presentation by emphasizing that accessibility is a cornerstone of high-quality web design. She supported her point with a striking statistic: every fourth European is classified as a person with special needs. This highlights the sheer scale of users who face disadvantages when websites and apps fail to meet accessibility standards. Mets further outlined key European regulations governing digital accessibility, including:

- EU Directive 2016/2102 on the accessibility of websites and mobile applications of public sector bodies,

- EU Directive 2019/882 on accessibility requirements for products and services, and

- EN 301 549, the European standard on accessibility requirements for ICT products and services.

These legal frameworks underline the necessity for designers and developers to prioritize accessibility. However, it was Mets’ practical advice that truly resonated with me. She shared 10 accessibility rules that, when applied, can resolve 80% of common usability issues in websites and apps. The simplicity and effectiveness of these rules made them particularly impactful.

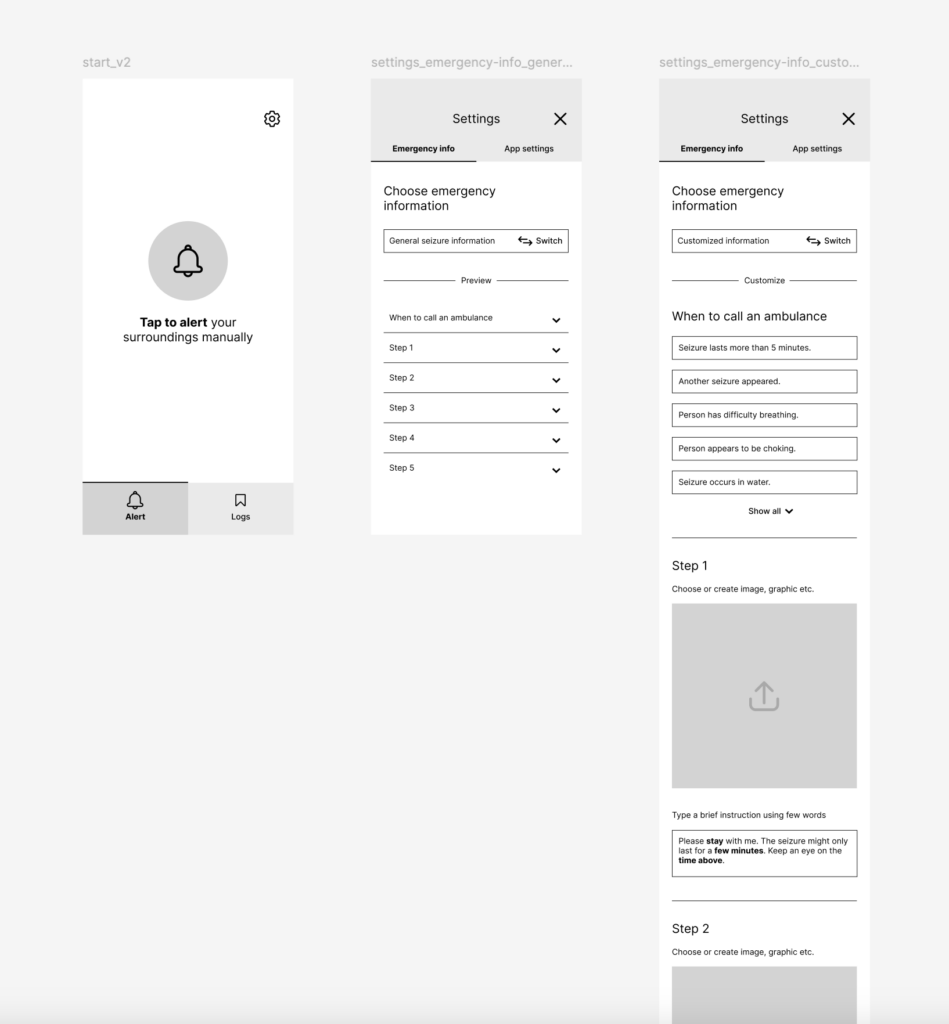

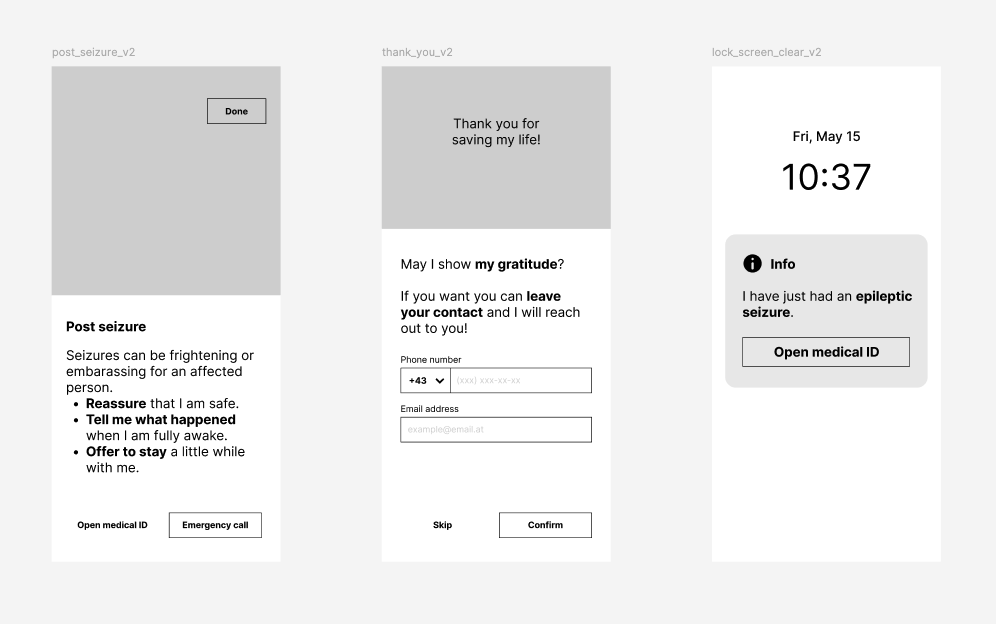

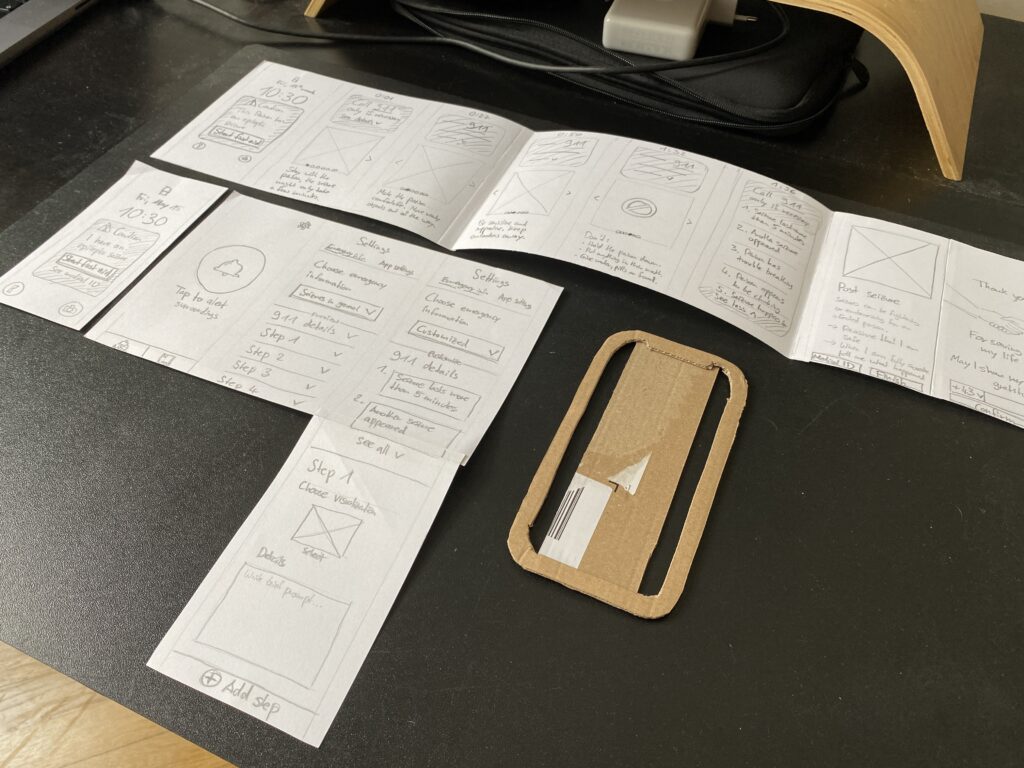

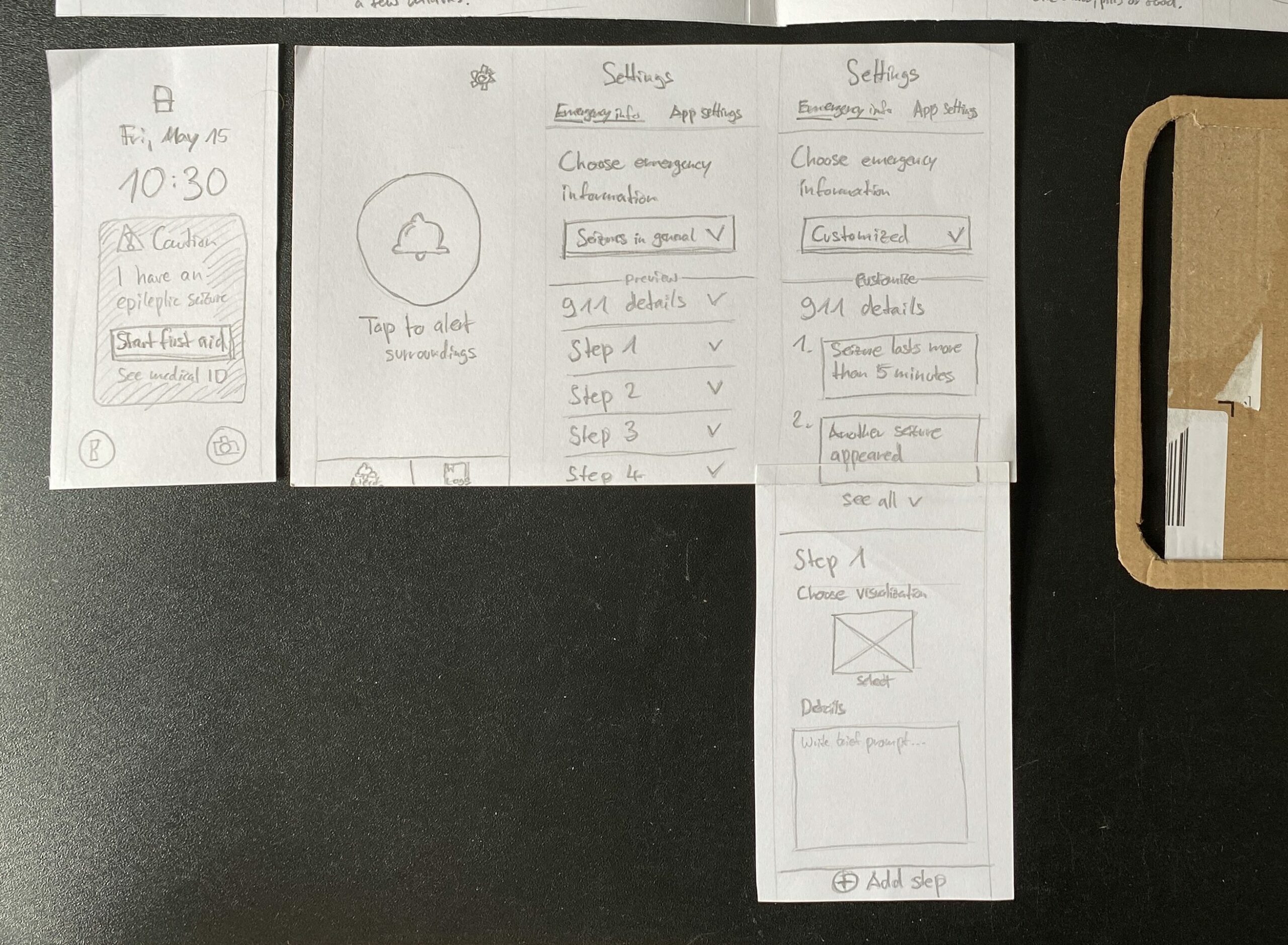

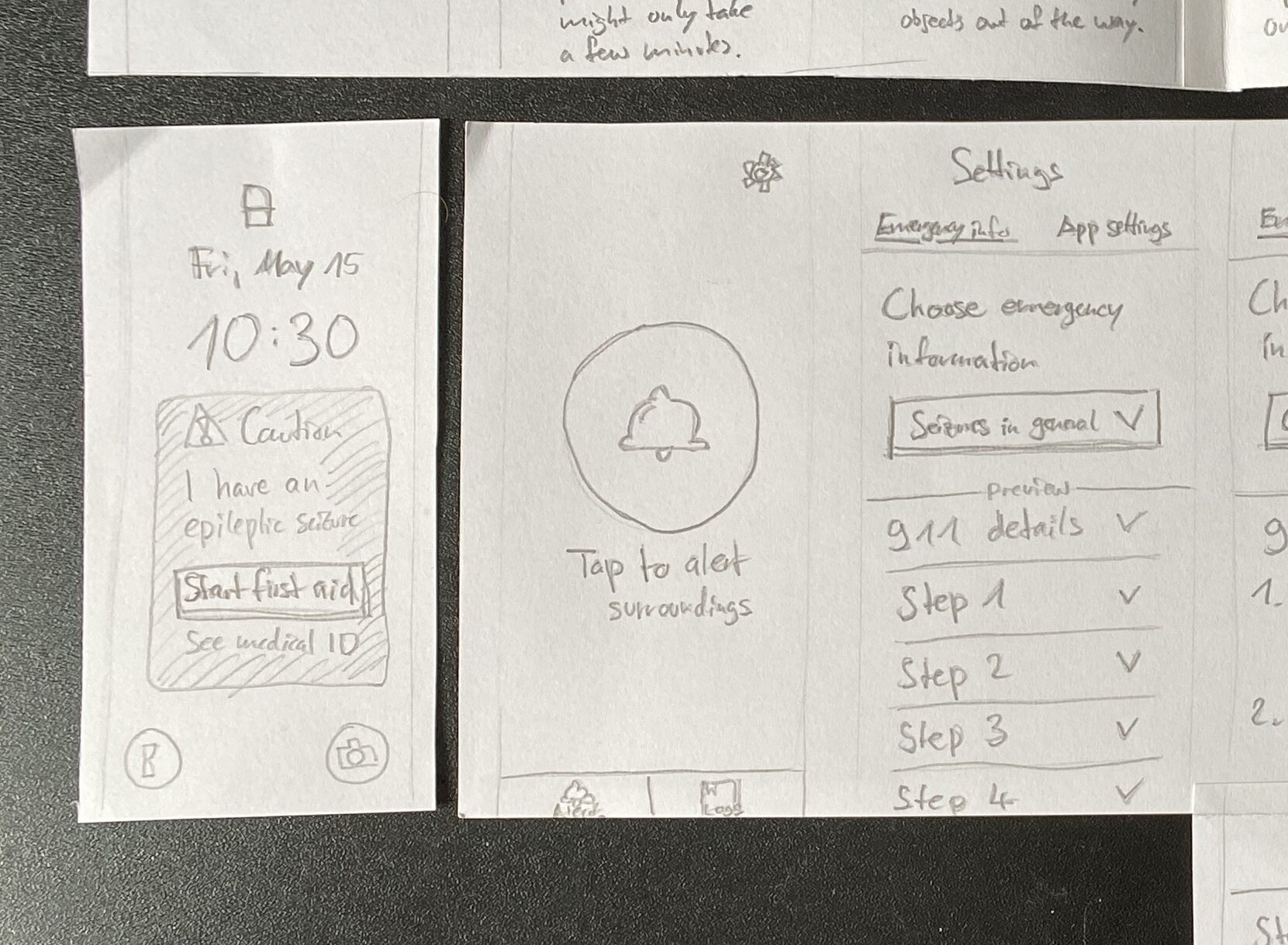

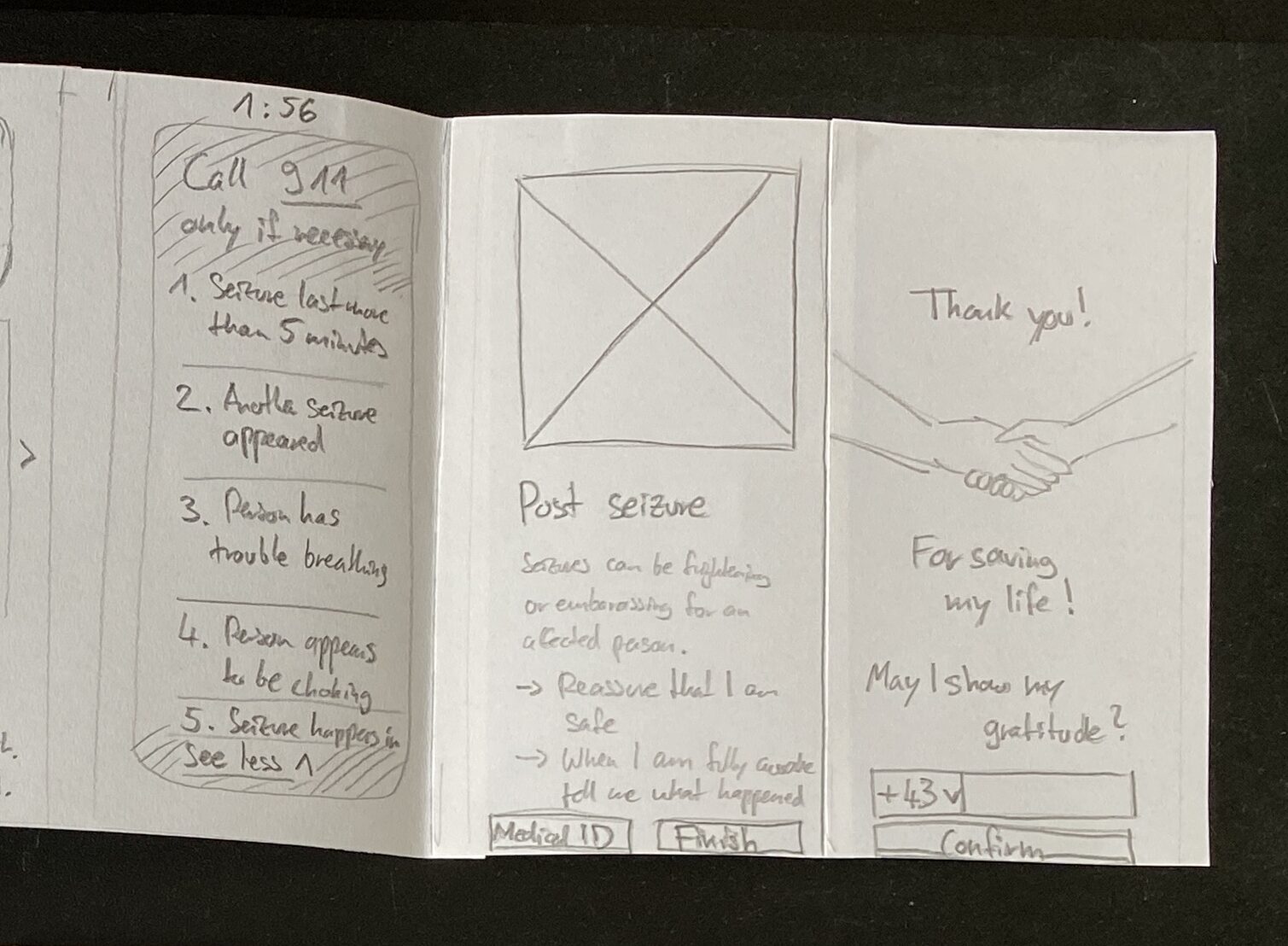

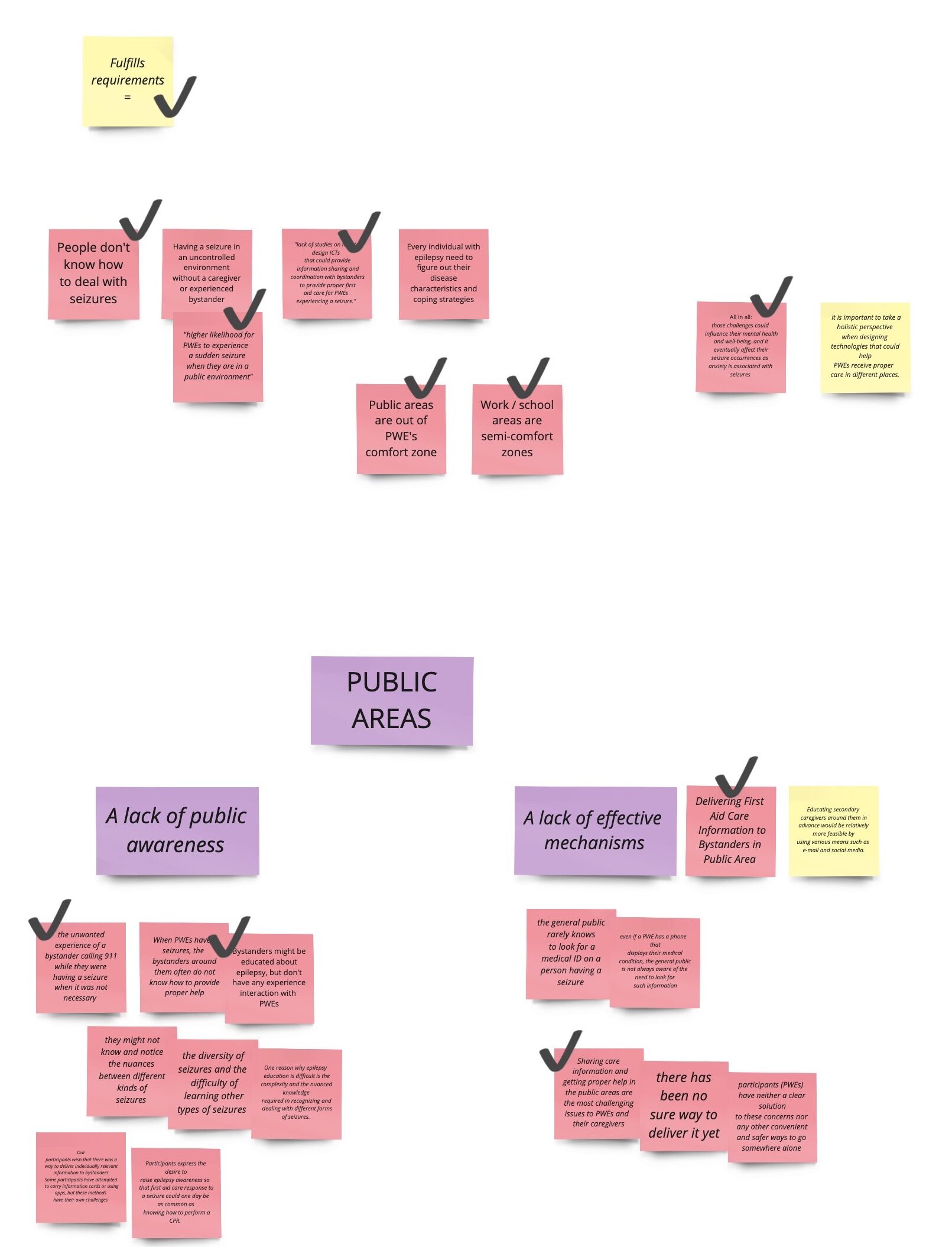

Applying accessibility principles to my prototype

Mets’ accessibility guidelines felt directly applicable to my ongoing project, which I am developing as part of the Design & Research module at FH JOANNEUM. Over the last two semesters, I have been working on a mobile app concept aimed at assisting untrained first aiders in public spaces. The app provides step-by-step instructions on how to secure and help a person experiencing an epileptic seizure. Since a first aider can be anyone who happens to be in a public area, my app must cater to a diverse user base, including people with special needs. Mets’ principles offered a concrete framework to refine my design.

No moving content

One of Mets’ rules highlights the importance of avoiding autoplaying content, such as sounds, animations, or videos. If moving content is used, it should serve a clear purpose, and users must be able to pause it.

For my app, this means ensuring that emergency steps and instructions are presented clearly and with minimal motion. Movement can serve as a helpful explanatory tool, such as an animation showing the recovery position, but it should not overwhelm users or cause distractions. To address this, I plan to:

- justify the use of movement in each case to ensure it enhances comprehension,

- keep animations subtle and purposeful to reduce cognitive load, especially for sensitive users, and

- include an easily accessible pause button for any moving content.
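To make this rule more tangible for myself, here is a minimal sketch of the playback logic, written in Python only for illustration. The class `PausableAnimation`, its fields, and the `reduce_motion` flag are hypothetical names of my own; they are not from Mets' talk or from any mobile framework, and a real app would connect them to the platform's own motion settings.

```python
from dataclasses import dataclass


@dataclass
class PausableAnimation:
    """Hypothetical model of one instructional animation in the app."""

    purpose: str                 # why the motion exists, e.g. "show recovery position"
    reduce_motion: bool = False  # assumed to mirror a system-level reduced-motion setting
    playing: bool = False        # starts paused, so nothing autoplays

    def play(self) -> bool:
        """Start playback only on explicit request and only if motion is allowed."""
        if not self.reduce_motion:
            self.playing = True
        return self.playing

    def pause(self) -> None:
        """Stop playback; the user can always do this."""
        self.playing = False

    def toggle(self) -> bool:
        """Handler for a single play/pause button; returns the new state."""
        if self.playing:
            self.pause()
        else:
            self.play()
        return self.playing
```

The design choice here is that the animation defaults to paused and requires a stated purpose, so every piece of motion has to be justified and started by the user.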

Contrasted color

Color contrast plays a pivotal role in ensuring text readability and emphasizing interactive elements. Mets warned against placing text on images, as this can reduce contrast and make text difficult to read. She recommended using contrast-checking tools to ensure compliance with accessibility standards.

As my prototype progresses to a high-fidelity design, I will focus on selecting appropriate color schemes that enhance usability. Given the app’s life-saving nature, its design must remain minimalistic and user-friendly. High-contrast color combinations will ensure that all users, including those with visual impairments, can easily read text and identify critical elements like buttons and icons.
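Contrast-checking tools typically compute a contrast ratio between text and background color. The sketch below shows roughly how such a check could work, assuming the WCAG 2.x relative-luminance formula and its 4.5:1 threshold for normal-sized text (level AA). The talk summary above does not name a specific standard or threshold, so treat both as my assumption; the function names are my own as well.

```python
def _channel_to_linear(value: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = value / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * _channel_to_linear(r)
            + 0.7152 * _channel_to_linear(g)
            + 0.0722 * _channel_to_linear(b))


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1.0 (identical) to 21.0."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str) -> bool:
    """Assumed threshold: 4.5:1 for normal-sized text."""
    return contrast_ratio(foreground, background) >= 4.5
```

For example, `passes_aa("#767676", "#FFFFFF")` is true, while the only slightly lighter gray `#777777` on white falls just below the threshold. This shows how easily a color that looks fine can fail such a check.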

Clear error messages

Error messages are another critical aspect of accessibility. Mets stressed that they should be specific, clearly indicating what went wrong and offering solutions. For example, errors should have precise labels, point to the problematic area, and be compatible with screen readers.

In my app, this principle will guide the design of features like the medical ID form and emergency call options. If an error occurs, such as a failure to submit an emergency form, the user should receive an immediate and clear explanation with steps to resolve the issue. Additionally, I plan to make error notifications compatible with screen readers, ensuring that users with disabilities are adequately informed.
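As a rough sketch of what a specific error could look like in data, the following Python example validates a simplified medical ID form. The field names (`name`, `emergency_phone`), the wording of the messages, and the `announcement` helper are all hypothetical; they only illustrate the idea that each error names the affected field, says what went wrong, suggests a fix, and is available as plain text for a screen reader.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FormError:
    field: str       # which input the error points to
    message: str     # what went wrong, in plain words
    suggestion: str  # how the user can fix it

    def announcement(self) -> str:
        """One plain-text sentence that a screen reader could read out."""
        return f"Error in {self.field}: {self.message} {self.suggestion}"


def validate_medical_id(form: dict[str, str]) -> list[FormError]:
    """Return one specific error per problematic field (empty list if valid)."""
    errors: list[FormError] = []

    if not form.get("name", "").strip():
        errors.append(FormError(
            "Name", "The name is missing.", "Enter a first and last name."))

    # Allow spaces and a leading plus sign; everything else must be digits.
    digits = form.get("emergency_phone", "").replace(" ", "").lstrip("+")
    if not digits.isdigit():
        errors.append(FormError(
            "Emergency phone",
            "The number is missing or contains letters or symbols.",
            "Use digits only, for example +43 660 1234567."))

    return errors
```

Calling `validate_medical_id({"name": "", "emergency_phone": "abc"})` returns two errors, one per field, instead of a single generic "submission failed" message.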

Broader implications for design

Mets’ talk served as a timely reminder that accessibility is not a niche concern but a universal requirement. It goes beyond catering to individuals with disabilities and improves the overall user experience for everyone. Features like clear navigation, sufficient contrast, and error notifications benefit all users, regardless of their abilities.

Reflecting on her presentation, I was reminded that accessibility isn’t just about meeting regulations; it’s about embracing an inclusive mindset. By ensuring that websites and apps are accessible, designers actively contribute to breaking down barriers and creating a more equitable digital landscape.

Conclusion

Attending the World Usability Congress 2024 was an inspiring and educational experience, particularly Mari-Ell Mets’ session on accessibility. Her practical advice directly applies to my work, offering valuable insights to improve my app prototype. By implementing Mets’ accessibility rules, I can ensure that my app is not only functional but also inclusive and user-centered.

In a world where digital experiences are increasingly integral to our daily lives, designing for accessibility is no longer optional; it is essential. Mets’ presentation reaffirmed my commitment to creating designs that are not only innovative but also meaningful and inclusive. This learning experience will have a lasting impact on my approach to design.

Resources

World Usability Congress. "Agenda 2024." Accessed November 5, 2024. https://worldusabilitycongress.com/agenda-2024/?agenda=83CALT.

European Union. Directive (EU) 2016/2102 of the European Parliament and of the Council of 26 October 2016 on the Accessibility of the Websites and Mobile Applications of Public Sector Bodies. Accessed November 5, 2024. https://eur-lex.europa.eu/eli/dir/2016/2102/oj.

European Union. Directive (EU) 2019/882 of the European Parliament and of the Council of 17 April 2019 on the Accessibility Requirements for Products and Services. Accessed November 5, 2024. https://eur-lex.europa.eu/eli/dir/2019/882/oj.