At the beginning of the semester, I watched Blade Runner, the iconic sci-fi film that paints a vivid picture of a future where technology and humanity are deeply intertwined. Beyond its gripping story and stunning visuals, the movie left me with a lot to think about, especially how its holograms anticipate both the possibilities and the pitfalls of augmented reality (AR) and of our own technological advances.

Holograms and AR: A Glimpse into the Future

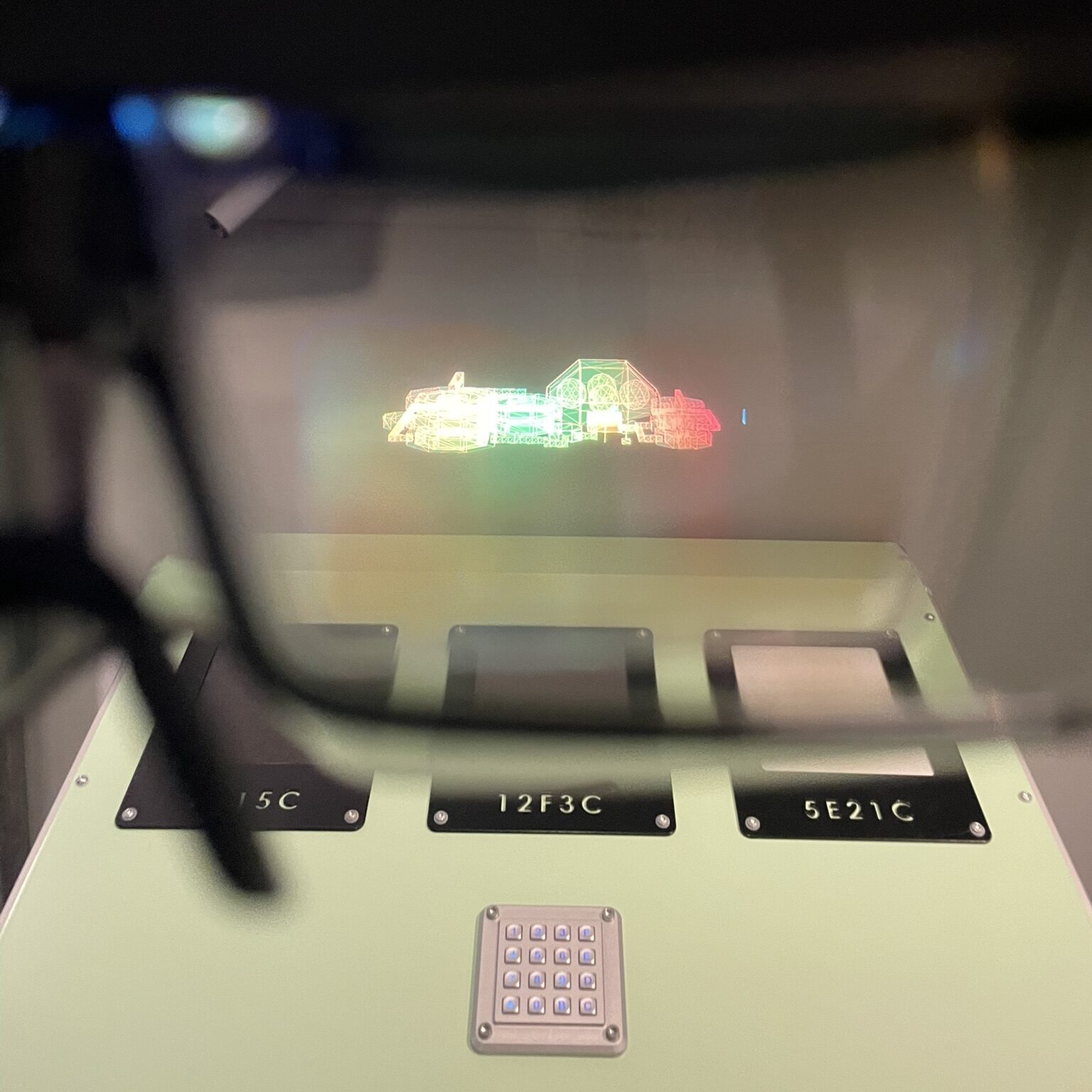

One of the most striking elements of Blade Runner is its use of holograms. From the giant, shimmering advertisements to the intimate, lifelike projections, these holograms feel like a natural part of the world. But what’s fascinating is how close this vision is to becoming reality—not through actual holograms, but through AR glasses.

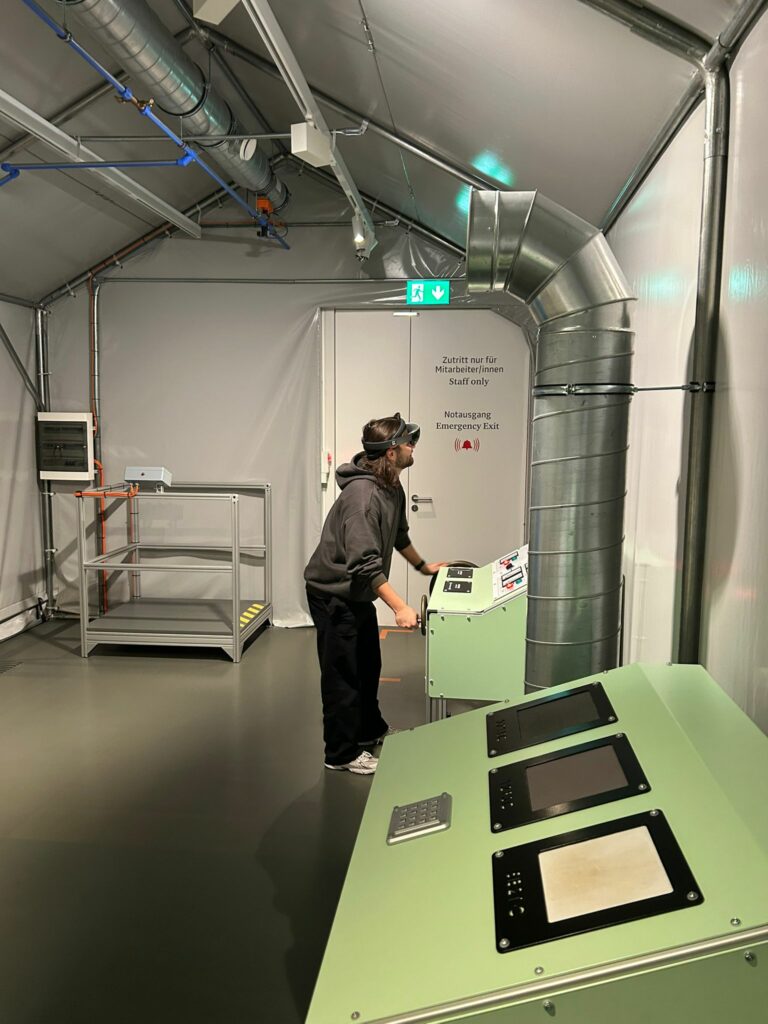

In the film, the holograms aren't physical objects. They're projections of light laid over the real environment. AR works in a similar spirit today: with AR glasses, digital elements are overlaid onto the real world, although only the wearer can see them. It's a subtle but powerful way to blend the physical and the digital, much like the holograms in Blade Runner.

This got me thinking: what if AR glasses became as ubiquitous as smartphones? We could have personalized ads floating in the air, virtual assistants walking beside us, or even holographic companions. The potential is exciting, but it also raises questions about privacy, distraction, and the line between reality and illusion.

The Dystopian Side of Progress

Blade Runner doesn’t just showcase the wonders of technology—it also highlights its darker side. The film’s world is a dystopia where technological advances have led to environmental decay, social inequality, and a loss of humanity. The holograms, while mesmerizing, are part of a society that’s become overly reliant on technology, to the point where it’s hard to tell what’s real and what’s artificial.

This dystopian vision resonates with some of the concerns surrounding AR and other immersive technologies. As we push further into technological innovation, we risk creating a world where digital overlays dominate our perception, blurring the boundaries between reality and simulation. What happens when we spend more time in augmented worlds than in the real one? How do we ensure that technology enhances our lives without eroding our connection to the physical world and each other?

Inspiration for the Future

Despite its cautionary tone, Blade Runner is also a source of inspiration. It shows us what’s possible when creativity and technology come together. The holograms, the neon-lit cityscapes, and the seamless integration of digital and physical elements are a testament to the power of imagination.

For me, the film is a reminder to approach AR and other immersive technologies with both excitement and caution. As designers and developers, we have the opportunity to shape how these technologies are used. We can create experiences that enhance reality without overwhelming it, that bring people together without isolating them, and that push boundaries without losing sight of what makes us human.