This blog post details my first proper implementation of a gamified exercise prototype. I will explain the steps I took and share my thought process along the way.

As mentioned before, there are multiple ways of providing (gamified) feedback to a user. I briefly want to talk about the differences between short-term immediate and long-term delayed feedback:

- Timing: Immediate feedback arrives while the user is performing the movement, while long-term feedback arrives later, for example after a session.

- Purpose: Immediate feedback enhances the act of exercising, while long-term feedback enhances the overall experience by providing a broader perspective on progress.

- Type of Feedback: Immediate feedback is typically corrective and directly related to the action performed, while long-term feedback is cumulative and summarizes performance over time.

Choosing the Exercise and Sensors

When creating an actual prototype, I first looked at two things: (a) which exercise I could replicate, and (b) which sensors were available to me.

Selecting the Exercise

I researched various sources and training programs to find exercises that could be easily enhanced digitally. I chose this specific exercise for three reasons: it seemed easy to track, the resistance band left room to mount the phone, and it appears in several therapy programs.

Testing the Sensors

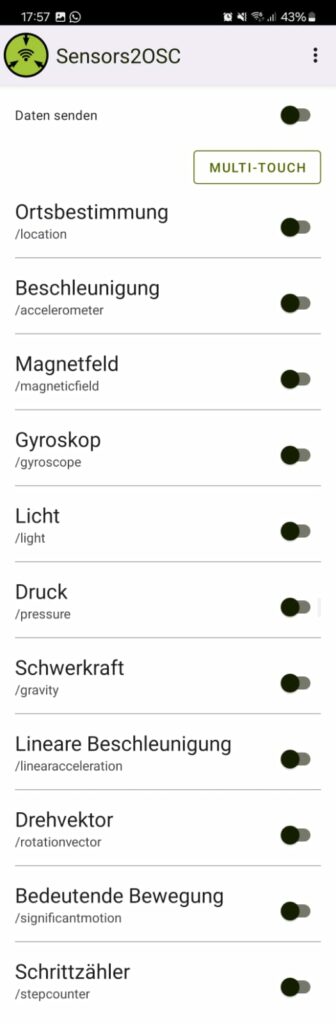

I explored various sensors available through Sensors2OSC. After testing, I found that the gyroscope, gravity, and accelerometer provided the clearest feedback when performing the exercise. I decided to use the gravity.z value as it gave the most reliable data.

Developing the Prototype

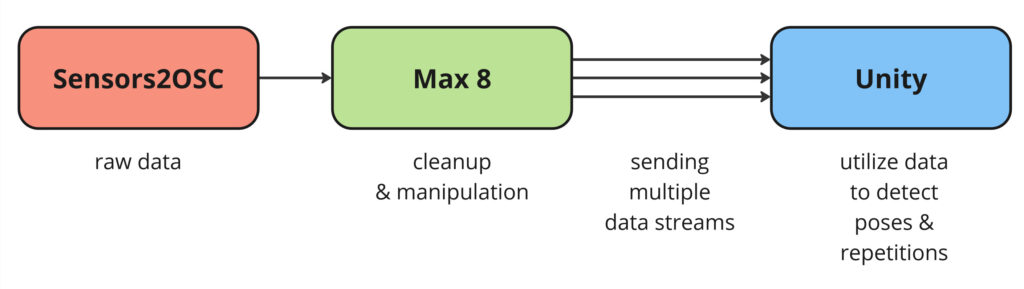

I initially wanted to send the data to Unity as a Vector3 (using Keijiro’s OSCJack), but I ran into problems getting the messages from Max 8 to Unity. I eventually decided to send the data as an integer instead.
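To illustrate what sending the value as an integer can involve, here is a minimal sketch in Python (not the Max 8 patch used in the prototype). It assumes gravity.z lies roughly between -9.81 and 9.81, and the scale factor of 100 and the address `/gravity` are hypothetical choices made only for this example. The byte layout follows the OSC 1.0 rules for a single int32 argument.

```python
import struct

GRAVITY_MAX = 9.81  # assumed upper bound of gravity.z in m/s^2
SCALE = 100         # assumed scale factor, keeps two decimal places


def gravity_to_int(gravity_z: float) -> int:
    """Clamp the reading to the expected range and scale it to an integer."""
    clamped = max(-GRAVITY_MAX, min(GRAVITY_MAX, gravity_z))
    return round(clamped * SCALE)


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * ((-len(data)) % 4)


def encode_osc_int(address: str, value: int) -> bytes:
    """Build an OSC message that carries one big-endian 32-bit integer."""
    return _osc_string(address) + _osc_string(",i") + struct.pack(">i", value)


if __name__ == "__main__":
    # "/gravity" is a hypothetical address, not one taken from the prototype.
    packet = encode_osc_int("/gravity", gravity_to_int(9.81))
    print(packet.hex())
```

A single integer keeps the message as simple as possible, at the cost of fixing the precision on the sending side.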

Structuring the Code

Before starting the actual coding, I planned how to structure it. I modified my previous approach and decided to handle all game logic in Unity, using Max 8 only to receive and send data.

Updated flowchart for the data processing. Now there is a manipulation step in Max 8, and multiple values are sent to Unity for detecting repetitions and triggering actions/events accordingly.

I then modified my script and scene from last time. The UI now tracks repetitions and sets, showing the user when they have successfully performed the exercise. The code has grown significantly to handle these changes. It includes checks to recognize repetitions, locking mechanisms to ensure the count increases only once per repetition, UI updates, etc.
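The locking idea can be sketched as follows. This is a simplified Python illustration, not the Unity script from the prototype: the two thresholds, the set size, and all names are assumptions chosen for the example. A repetition is counted once when the value crosses the upper threshold, and the counter stays locked until the value falls back below the lower threshold.

```python
from dataclasses import dataclass


@dataclass
class RepCounter:
    top_threshold: int = 700    # assumed value that marks the end position
    reset_threshold: int = 300  # assumed value that marks the start position
    reps_per_set: int = 10      # assumed number of repetitions per set
    reps: int = 0
    sets: int = 0
    locked: bool = False

    def update(self, value: int) -> bool:
        """Feed one sensor reading; return True if it completed a repetition."""
        if not self.locked and value >= self.top_threshold:
            # Lock so that further readings near the top do not count again.
            self.locked = True
            self.reps += 1
            if self.reps == self.reps_per_set:
                self.sets += 1
                self.reps = 0
            return True
        if self.locked and value <= self.reset_threshold:
            # Back at the start position: allow the next repetition.
            self.locked = False
        return False


if __name__ == "__main__":
    counter = RepCounter(reps_per_set=2)
    for reading in [100, 500, 750, 800, 720, 400, 200, 760, 100]:
        if counter.update(reading):
            print(f"Repetition counted: sets={counter.sets}, reps={counter.reps}")
```

Using two separate thresholds means that small fluctuations around the end position cannot unlock the counter by accident.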

Coding was mostly a matter of planning and trial and error to figure out exact values. Interestingly, some planned functionalities, like setting a base value when pressing the spacebar, turned out to be unnecessary because the gravity data didn’t require it.

Lessons Learned

For this first prototype, handling all aspects (receiving data, triggering events, checking values, updating UI, etc.) in one script was manageable. However, for future iterations, separating the code into multiple classes and following the principle of data encapsulation from object-oriented programming would provide more structure. Additionally, establishing a naming convention beforehand could help avoid confusion in the future.
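As a rough idea of what such a separation could look like, here is a small Python sketch. The class names and the label format are hypothetical, and a real Unity version would be written in C#. One class owns the counters and exposes them as read-only, while a second class only turns them into display text.

```python
class ExerciseProgress:
    """Owns the counters; other classes can read them but not set them."""

    def __init__(self, reps_per_set: int) -> None:
        self._reps_per_set = reps_per_set
        self._reps = 0
        self._sets = 0

    @property
    def reps(self) -> int:
        return self._reps

    @property
    def sets(self) -> int:
        return self._sets

    def add_repetition(self) -> None:
        """The only way to change the counters from outside."""
        self._reps += 1
        if self._reps == self._reps_per_set:
            self._sets += 1
            self._reps = 0


class ProgressLabel:
    """Turns progress into display text; knows nothing about sensors."""

    def __init__(self, progress: ExerciseProgress) -> None:
        self._progress = progress

    def text(self) -> str:
        return f"Sets: {self._progress.sets}  Reps: {self._progress.reps}"


if __name__ == "__main__":
    progress = ExerciseProgress(reps_per_set=3)
    label = ProgressLabel(progress)
    for _ in range(4):
        progress.add_repetition()
    print(label.text())
```

Because the counters can only change through `add_repetition`, the UI code cannot accidentally overwrite them.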

In the next blog post, I will show and discuss my findings and results from this prototype, along with a video demonstration.

Sources:

- https://www.semanticscholar.org/paper/A-Participatory-Design-Framework-for-the-of-Systems-Charles-McDonough/4149205989849ac7baf0fd4cd26843923aa192e9

- https://core.ac.uk/download/pdf/55533955.pdf Games & Feedback

- https://www.healthline.com/health/shoulder-mobility-exercises

- https://www.whyiexercise.com/physical-therapy-exercises.html

- https://thephysioshop.ca/blog/upper-body-stretches-for-better-flexibility-and-posture/

- https://www.hingehealth.com/resources/articles/arm-stretches/

- https://medi-dyne.com/blogs/exercises/upper-arm-pain-exercises

- https://www.dunsboroughphysio.com.au/physio-exercises-upper-limb-flexibility.html
