What happened so far?

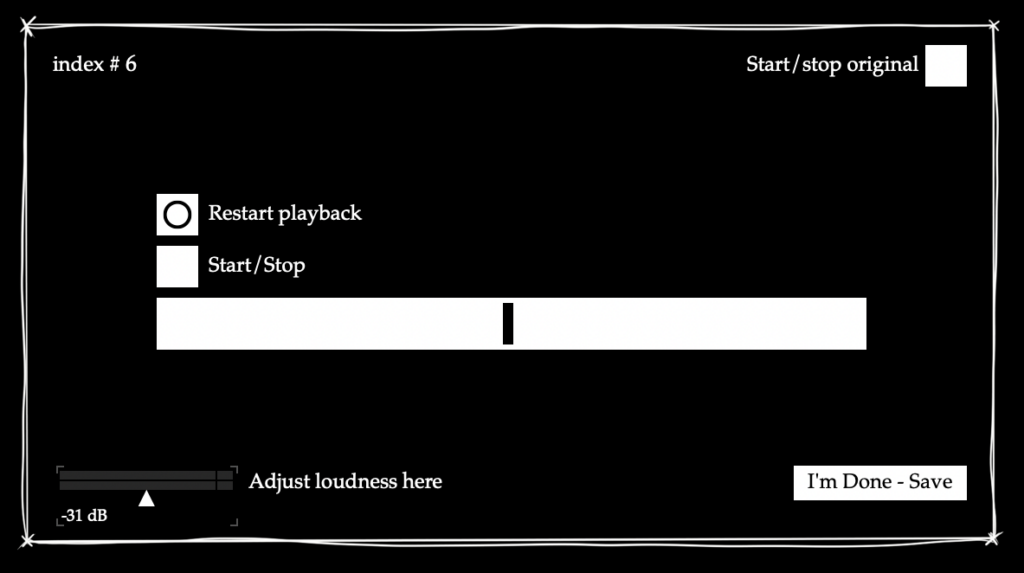

Recently, my time on the project went into figuring out how best to turn it into an exhibit that is valuable both for the user and for research purposes. I knew it would be important to keep the interface intuitive and, at the same time, not to clutter it with information. I also needed a good way to collect parameter data. After some research and experiments I found that the coll object would work best for my purpose: it stores each entry under an index number and separates the data with commas, which lets me export the anonymous results as a CSV file. The save button and volume adjustments were non-negotiable, but I struggled a bit with how to implement playback of both the source sound and the processed sound in a way that makes sense just from looking at the interface. Another aspect I considered was that the visible interface would need a "phantom" slider: after the previous person saves, the underlying parameter always jumps to a random value, while the slider on screen looks as if it is back at the centre. This way, test subjects cannot copy the result from the previous person and really have to rely on their hearing to match the processed audio as closely as possible to the source sound.
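To make the phantom slider and the indexed logging a bit more concrete, here is a minimal sketch in Python. It is not the Max patch itself, only an illustration of the idea under some assumptions: the names `PhantomSlider`, `save_result` and `to_csv`, the 0 to 1 parameter range, and the `max_offset` limit are all made up for this example.

```python
import csv
import io
import random


class PhantomSlider:
    """Visible position and real parameter differ by a hidden random offset."""

    def __init__(self, lo=0.0, hi=1.0, max_offset=0.25, rng=None):
        self.lo, self.hi = lo, hi
        self.max_offset = max_offset  # assumed limit, not from the patch
        self.rng = rng if rng is not None else random.Random()
        self.centre = (lo + hi) / 2
        self.reset()

    def reset(self):
        # New hidden offset; the visible slider snaps back to the centre.
        self.offset = self.rng.uniform(-self.max_offset, self.max_offset)
        self.visible = self.centre

    def move(self, visible):
        # What the user does on screen, clamped to the slider range.
        self.visible = min(max(visible, self.lo), self.hi)

    @property
    def value(self):
        # The parameter that is actually heard and logged.
        return min(max(self.visible + self.offset, self.lo), self.hi)


def save_result(rows, slider):
    # Store the result under a running index (similar in spirit to coll),
    # then randomise the slider for the next person.
    rows.append((len(rows) + 1, round(slider.value, 4)))
    slider.reset()


def to_csv(rows):
    # Write all anonymous results as comma-separated text.
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["index", "value"])
    writer.writerows(rows)
    return buf.getvalue()
```

One limitation of this sketch: because the real value is clamped to the parameter range, a large hidden offset makes part of that range unreachable from the visible slider, which is why the offset is capped here. Whether that trade-off is acceptable depends on where the target value sits.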

Ongoing

During a supervisor meeting, we tried to think of a way to improve the playback situation. Ideally, three buttons at the centre of the screen would be enough. One option would be to gate the playback of the original sound, so that whenever it stops playing, the processed sound starts automatically. This still needs more thought and a better practical solution.

Results and Reflection

Showing this part of the project to the public definitely added a new challenge, because now it is not just about whether the software makes sense to me, but also whether it makes sense to a first-time user with little to no experience. The idea of people using their hearing to adjust the parameter in a sort of audioscope-like manner is very interesting to me though, and I look forward to seeing the results. I wonder how fine the resolution of the parameter has to be before people no longer notice a significant difference, and how much that varies between people.

Objectives for Next Time

- Finalise exhibit version (software)

- Figure out physical exhibition setup

- Write a guideline for the showcase supervisors on how to set up the exhibit and turn it on and off