The audio programming took up the entire semester, and it remains a focus as I refine it to align better with the compositional idea and to make the patches as practical as possible.

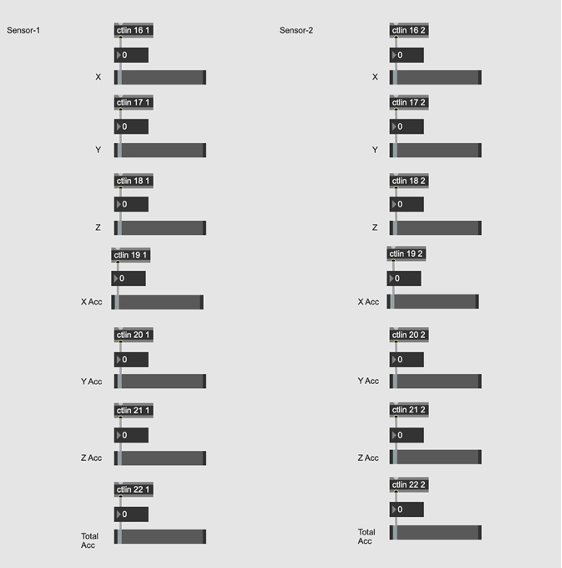

I started by mapping the sensors in Max MSP. Each sensor can be mapped to seven parameters via MIDI numbers. The seventh parameter, total acceleration, is obtained directly from the SOMI-1 receiver. It is calculated with a Pythagorean formula from the acceleration data, excluding gravitational force to improve accuracy.

Figure I. Movement parameters of SOMI-1 around the X, Y, and Z axes
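The receiver computes total acceleration itself, so the following is only a minimal sketch of what a Pythagorean magnitude looks like, together with one possible way of scaling it to a 7-bit MIDI value. It assumes the per-axis values are linear acceleration (gravity already removed) in g. The function names and the 0 to 2 g input range are hypothetical and are not taken from the SOMI-1 documentation.

```python
import math


def total_acceleration(ax: float, ay: float, az: float) -> float:
    """Magnitude of the 3-axis acceleration vector (Pythagorean formula).

    Assumption: ax, ay, az are linear acceleration with gravity removed.
    """
    return math.sqrt(ax * ax + ay * ay + az * az)


def to_midi(value: float, lo: float, hi: float) -> int:
    """Scale `value` from the hypothetical range [lo, hi] to a MIDI value 0-127.

    Values outside the range are clamped to 0 or 127.
    """
    norm = (value - lo) / (hi - lo)
    norm = min(max(norm, 0.0), 1.0)
    return round(norm * 127)


# Hypothetical reading: movement mostly along Y, some along X, none along Z.
mag = total_acceleration(0.75, 1.0, 0.0)
print(mag, to_midi(mag, 0.0, 2.0))  # prints "1.25 79"
```

Clamping keeps unusually strong gestures from producing values outside the MIDI range.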

What I learned from the mapping process is that, even when I try to make my movements very specific, I generally cannot isolate a single one of these parameters while moving. This is a key difference I need to keep in mind when mapping movement sensors, as opposed to conventional, stationary MIDI controllers. To map the sensors effectively while keeping the actual movements in mind, I divided the motions of each sensor into seven parameters:

- Rotation X

- Rotation Y

- Rotation Z

- Acceleration X

- Acceleration Y

- Acceleration Z

- Total Acceleration

Figure II. An overview of sensor mapping in Max MSP
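The observation that a single movement rarely changes only one parameter can be illustrated with a small sketch: one sensor reading is held as a frame of the seven parameters listed above, and two consecutive frames are compared to see which parameters moved. The class and function names, the 0.0 to 1.0 normalisation of every value, and the threshold are all hypothetical choices for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class SensorFrame:
    """One reading from a single sensor: the seven parameters.

    Assumption: every value has already been normalised to 0.0-1.0.
    """
    rotation_x: float
    rotation_y: float
    rotation_z: float
    acceleration_x: float
    acceleration_y: float
    acceleration_z: float
    total_acceleration: float


def changed_parameters(prev: SensorFrame, curr: SensorFrame, threshold: float) -> list[str]:
    """Names of the parameters that moved by more than `threshold` between two frames."""
    return [
        f.name
        for f in fields(SensorFrame)
        if abs(getattr(curr, f.name) - getattr(prev, f.name)) > threshold
    ]


# Hypothetical frames for a gesture intended as "rotation around X only".
prev = SensorFrame(0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.0)
curr = SensorFrame(0.7, 0.6, 0.5, 0.5, 0.62, 0.5, 0.2)
print(changed_parameters(prev, curr, 0.05))
# prints ['rotation_x', 'rotation_y', 'acceleration_y', 'total_acceleration']
```

In this invented example the intended X rotation also moves three other parameters, which is the kind of crosstalk a mapping has to tolerate.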

After completing the initial movement mapping, I began creating patches for the interaction part based on the list above. This process is ongoing and will likely continue until the project is complete. Meanwhile, a crucial aspect I want to focus on this summer is applying the patches to the sensors and testing how they work, both on their own and together with the violin.

Throughout this process, I have become aware that playing the violin limits my movement mapping. I am still working out whether to wear the sensors on my hands or elsewhere, but it is already clear that most of the mapping will be for the right hand, that is, the bowing arm. However, the sensors could also be mapped not only to the usual gestures that occur while playing the violin but also to some unconventional movements that trigger certain parameters, which would require more precise mapping. Overall, there are several mapping possibilities that I need to consider and examine thoroughly.

There are several types of mapping strategies that can be employed, regardless of whether the relationship between the control input and the parameter output is linear or non-linear:

One-to-One Mapping: This is the simplest form of mapping, where each control or sensor input corresponds directly to a specific parameter or output.

Multi-Parameter Mapping: This approach allows a single sensor input to influence several aspects of the sound, controlling multiple parameters simultaneously or sequentially. It is also possible to reassign the sensors' functions with a pedal, so that each sensor can take on different combinations of tasks.
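The strategies above can be sketched outside Max in a few lines of Python, purely to show the logic: a linear and a non-linear (exponential) curve, a one-to-one mapping, a multi-parameter mapping, and a pedal that cycles between mapping modes. The sound-parameter names (`volume`, `filter_cutoff`, `reverb_mix`), the curve constant `k`, and the `PedalModeSwitch` class are hypothetical; inputs are assumed to be normalised to 0.0 to 1.0.

```python
import math


def linear(x: float) -> float:
    """Linear curve: output follows the input directly."""
    return x


def exponential(x: float, k: float = 3.0) -> float:
    """Non-linear curve on 0..1: gentle at first, steeper near the top."""
    return (math.exp(k * x) - 1.0) / (math.exp(k) - 1.0)


def one_to_one(x: float) -> dict:
    """One sensor value drives exactly one (hypothetical) sound parameter."""
    return {"volume": linear(x)}


def multi_parameter(x: float) -> dict:
    """One sensor value drives several hypothetical parameters at once."""
    return {
        "volume": linear(x),
        "filter_cutoff": exponential(x),
        "reverb_mix": 1.0 - x,
    }


class PedalModeSwitch:
    """Each pedal press advances to the next mapping mode, wrapping around."""

    def __init__(self, modes):
        self.modes = modes
        self.index = 0

    def press(self) -> None:
        self.index = (self.index + 1) % len(self.modes)

    def map(self, x: float) -> dict:
        return self.modes[self.index](x)


pedal = PedalModeSwitch([one_to_one, multi_parameter])
print(pedal.map(0.5))  # one-to-one mode
pedal.press()
print(pedal.map(0.5))  # multi-parameter mode
```

Keeping each mode as a separate function makes it easy to check that every movement still has one clear, predictable effect within the active mode.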

I also intend to avoid counter-intuitive mapping, in which parameters are controlled in unexpected ways. It adds an element of unpredictability to the performance, which I believe is unnecessary for this project. Instead, my goal for the mapping is to ensure that each movement has a clear and meaningful relationship with the parameter it controls.