For my last Impulse, I want to talk about the feedback I gathered from three experts. This week I had the chance to discuss my thesis exposé with Ursula Lagger, Mr. Horst Hörtner from Ars Electronica Futurelab, and Mr. Kaltenbrunner from the University of Art and Design in Linz.

Ms. Lagger focused on my methods and the clarity of my exposé. She advised me to decide whether interviews are necessary and to refine my research focus. Her feedback reminded me of the importance of making my approach as clear as possible.

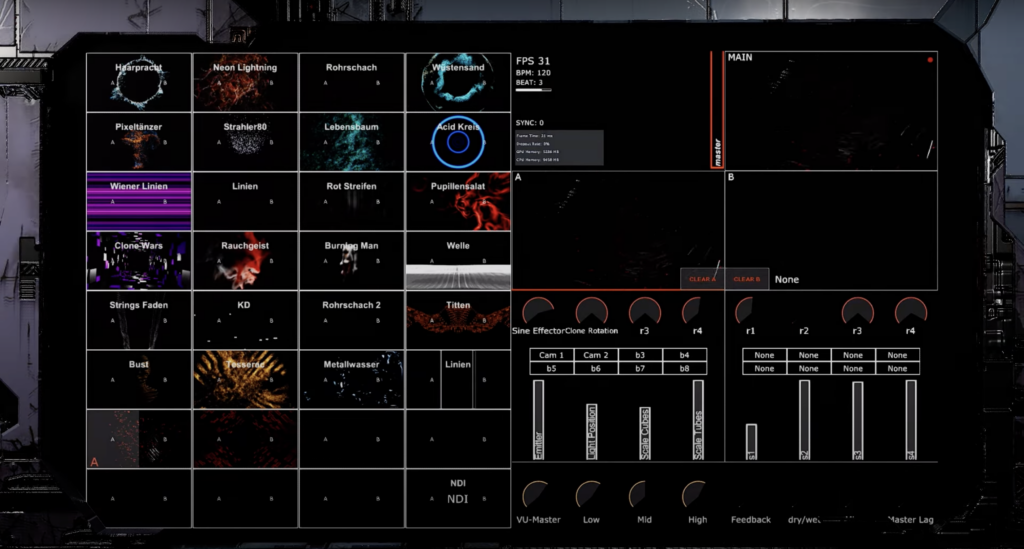

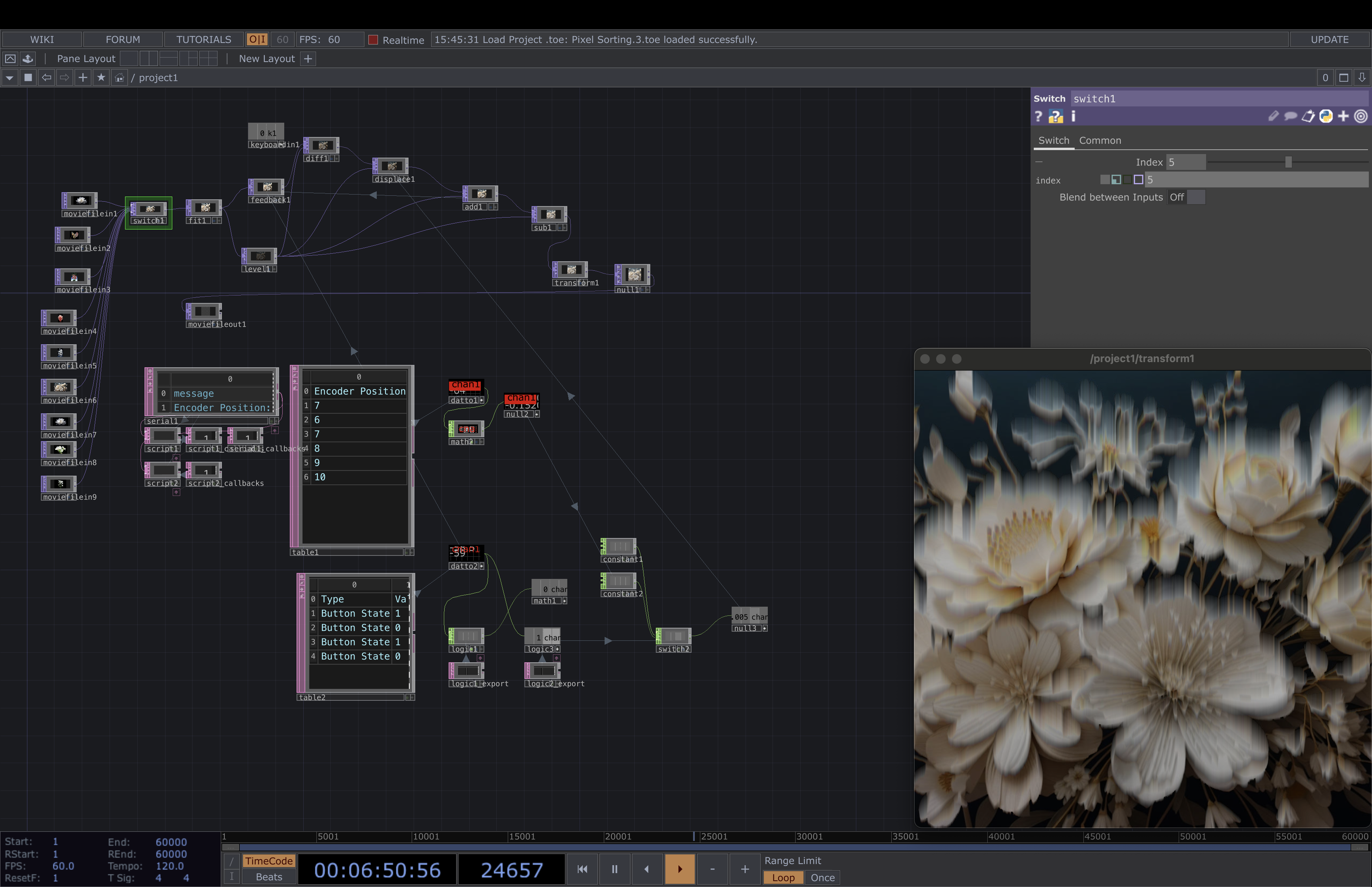

Mr. Hörtner gave me advice on presenting my work. After showing him my projects and portfolio, he suggested highlighting only three main projects that I want to pursue in the future. This helped me think about how to present my research in a more structured and impactful way.

Mr. Kaltenbrunner provided useful resources for my research and emphasized the importance of planning my installation. He suggested creating a draft, either in 3D or as a sketch, to clarify my concept. Since I already have experience with conceptual design, he encouraged me to refine my idea before moving into the production phase.

What I Learned

From these discussions, I learned that having a clear and focused exposé is crucial for my thesis. I also realized the importance of structuring my future projects and visually planning my installation before execution. These insights will help me stay organized and make my work more effective.

Conclusion

This feedback helped me see my next steps more clearly. I will now refine my thesis exposé and start planning my thesis installation in a more structured way, and I feel more confident about how to move forward. These conversations really showed me the importance of balancing research with practical application!