In my last blog post, I showed you the new visuals and features. Today, I conducted user testing to find out how the visualizations are perceived. Even before completing the visualizations, I had concerns that using abstract visuals might not achieve my goal. However, due to time constraints, I had no other choice but to proceed with this approach.

My Assumptions Before User Testing

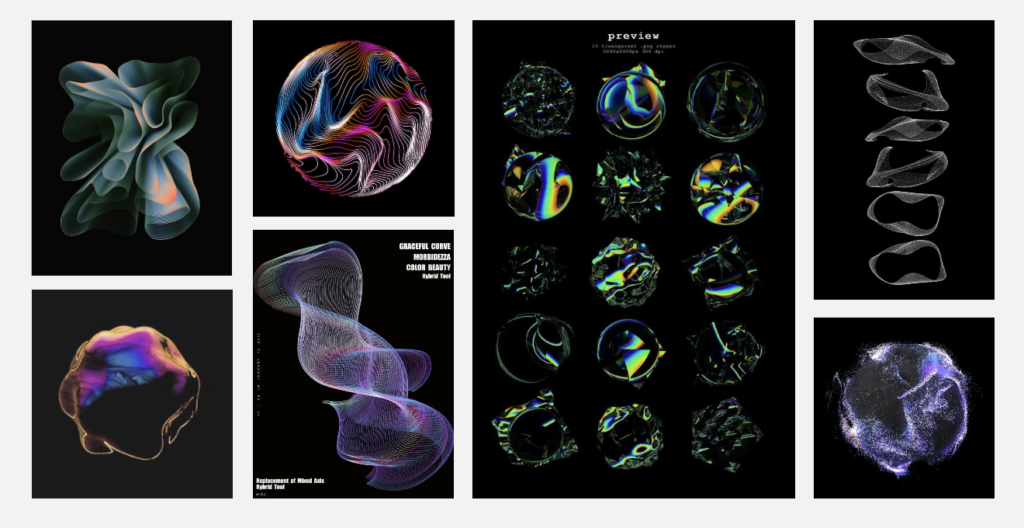

As mentioned before, while creating the visuals, I noticed that CO2 emissions are a very complex topic to visualize. The human eye cannot see the emissions, so people cannot form a mental image of them. My approach was to make these emissions more tangible using abstract visualizations, mainly particle systems. However, this abstract approach turned out to be the wrong one. I had over-interpreted the visuals and expected too much from the users; I essentially lost sight of them and went into the project with overly high expectations. The visuals I created cannot be interpreted correctly without prior knowledge and are therefore ineffective. I need to return to the basics to create understandable visualizations.

User Testing

I asked a friend from my student dormitory to test the prototypes. The participant is a 21-year-old male of German origin, studying psychology in his second semester. Before the testing, I introduced him to the topic and the prototype. Then, I asked him to input different values using the controller and to tell me how he interpreted each result. Here’s what came out of it:

Galaxy: The poor state of the galaxy was perceived as good because the representation was smaller than in the neutral and positive states. Size played the main role in his interpretation, while the rotation force receded into the background. Overall, the visualization did not convey a clear meaning.

Plasmasphere: Out of the three representations, the participant found the plasmasphere the most emotional. The different states were correctly perceived. The movements towards the camera looked threatening, making the participant think, "Oh no, what have I done?!" The positive state was acceptable, but it would have been perceived as more peaceful without the red elements.

Flowers: Contextually, the flowers were the most appropriate, but the changes between states were too extreme. If a solution can be found for this, the flowers would probably be the best approach, even though the plasmasphere was more emotional.

Summary

In summary, the abstract approach to visualizing CO2 emissions was not effective. The user testing revealed that the visuals were often misinterpreted, highlighting the need for more straightforward and basic representations. This feedback is crucial for refining the visualizations to make them more accessible and understandable.