Tag: Audio Reactive

🟦 09_Learnings & Next Steps

From exploring audio-reactive visuals in TouchDesigner to conceptualizing and designing a simple app prototype in Figma, this project was so much fun! It all began with a curiosity about how different languages manifest visually through sound, which led me to experiment with various tools and techniques.

I am so happy that I dived a bit deeper into TouchDesigner. I learned a lot about creating and manipulating visuals, especially making them respond to audio input, which was very fun to learn. Along the way, I also listened to the German alphabet countless times, which was unexpectedly useful (and quite amusing 🤭).

Transitioning to Figma, I designed a mini prototype for a pronunciation visualizer app. This app allows users to select their native language and a language they wish to learn, providing real-time visual feedback on pronunciation patterns. I had so many ideas for using the visuals in a practical application, but ultimately I focused on perfecting the visuals themselves, which was both challenging and rewarding.

Next Step

Looking ahead, I see a lot of potential for expanding this project. For example, developing a comprehensive language learning kit built on these types of visuals could make learning more engaging. Different visuals for different languages might be interesting. In a testing phase, it would also be interesting to test the visuals with deaf individuals to see if they can detect patterns in each language. This could open up new ways of understanding and teaching languages. I’m excited about the possibilities, so let’s see if I will work more on this project in the future!

🟦 07_Analysis of Outcome

After experimenting with AI voices and creating audio-reactive visuals for different languages, the next step is to analyze the outcomes in detail. Here’s how I approached the analysis and what I discovered.

Collecting and Comparing Data

First, I recorded the visuals for each language and phrase under consistent conditions. By placing these recordings side by side, I could directly compare them. I looked for patterns, shapes, and movements unique to each language, paying special attention to how the visuals reacted to specific sounds, particularly vowels and consonants.
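I compared the visuals by eye, but a simple numeric measure could make the side-by-side comparison a little less subjective. The sketch below is not what I used in the project. It is a minimal Python example, assuming the audio is available as a plain list of samples, that computes a loudness (RMS) envelope per phrase and measures how far two envelopes are apart. The function names, the frame size, and the synthetic "phrases" are all made up for illustration.

```python
import math


def rms_envelope(samples, frame_size):
    """Return one RMS loudness value per non-overlapping frame."""
    envelope = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        envelope.append(math.sqrt(sum(x * x for x in frame) / frame_size))
    return envelope


def envelope_distance(a, b):
    """Mean absolute difference between two envelopes over their shared length."""
    n = min(len(a), len(b))
    if n == 0:
        raise ValueError("both envelopes need at least one frame")
    return sum(abs(x - y) for x, y in zip(a[:n], b[:n])) / n


def synthetic_phrase(pattern, frame_size, freq=220.0, rate=8000):
    """Hypothetical stand-in for a recording: a sine tone whose
    amplitude follows `pattern`, one amplitude value per frame."""
    out = []
    for i, amp in enumerate(pattern):
        for j in range(frame_size):
            t = (i * frame_size + j) / rate
            out.append(amp * math.sin(2 * math.pi * freq * t))
    return out


FRAME = 400  # assumed frame length in samples (50 ms at 8 kHz)

# Two invented "phrases": one with steady loudness, one that alternates.
steady = synthetic_phrase([0.5, 0.5, 0.5, 0.5], FRAME)
choppy = synthetic_phrase([0.9, 0.1, 0.9, 0.1], FRAME)

env_steady = rms_envelope(steady, FRAME)
env_choppy = rms_envelope(choppy, FRAME)

print([round(v, 2) for v in env_steady])
print([round(v, 2) for v in env_choppy])
print(round(envelope_distance(env_steady, env_choppy), 2))
```

With real recordings, the synthetic phrases would be replaced by the decoded audio of each language sample. Loudness alone would not capture things like tone or vowel quality, so this is only a starting point.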

Observations and Differences

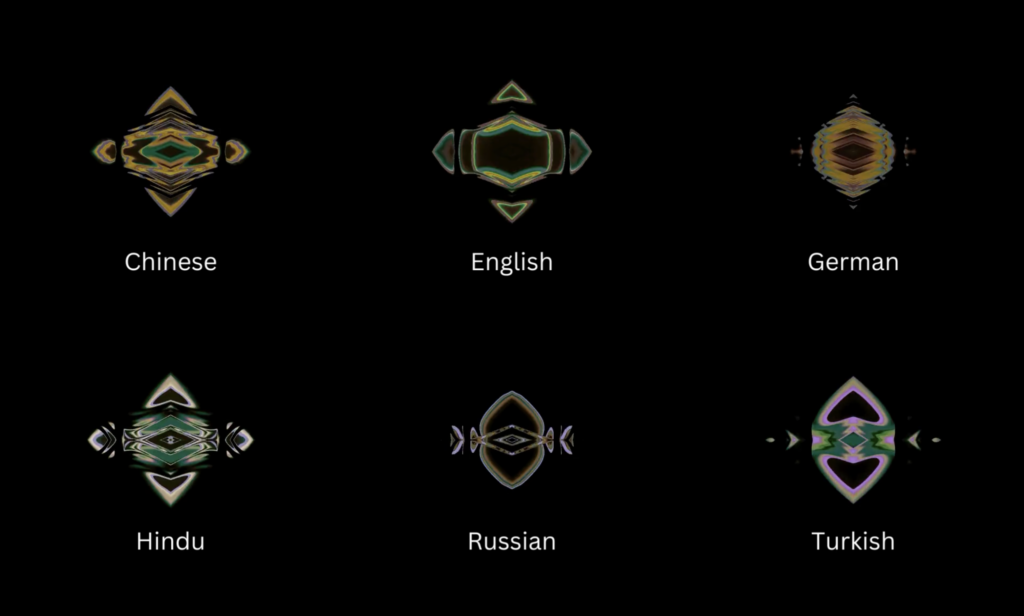

During my analysis, I noted some differences:

- German and English: Both languages produced clear, structured lines. However, German visuals had slightly more detailed patterns.

- Turkish: Turkish visuals showed more fluid and continuous lines, reflecting its smooth flow and connected structure.

- Russian: Russian visuals had a mix of sharp and smooth lines, showing its varied sounds and pronunciation.

- Hindi: Hindi visuals displayed intricate and dense patterns, likely due to its mix of different sounds.

- Chinese: Chinese visuals featured rhythmic, wave-like patterns, capturing the tones and unique structure of the language.

Here are some examples of the outcomes for „hello“ and „how are you?“:

- German: „Hallo, wie geht’s?“ – Clear and structured lines with some complexity.

- English: „Hello, how are you?“ – Similar to German but slightly less complex.

- Turkish: „Merhaba, nasılsın?“ – Fluid lines, continuous patterns.

- Russian: „Привет, как дела?“ – Mix of sharp and smooth lines.

- Hindi: „नमस्ते, आप कैसे हैं?“ – Dense, intricate patterns.

- Chinese: „你好, 你好吗?“ – Rhythmic, undulating patterns.

Next Step

Analyzing the audio-reactive visuals revealed subtle yet fascinating differences between languages. Each language’s phonetic characteristics influenced the visuals in unique ways. Moving forward, I want to gather feedback from people around me on where these visuals could be used and what they think of them so far.